March 09, 2006

Switching from Comcast to iTunes?

So, it looks like Apple is finally introducing some new ways to purchase video from the iTunes Video Store: the Multi-Pass and the Season Pass. In theory, if all of my favorite shows were offered on iTunes, I could simply subscribe to the appropriate multi-pass or season pass, and download all of my shows to my Mac using my Internet connection. Then, there would be no need for Comcast, my current cable provider. In theory, this should cost me less money, because instead of paying for cable TV and Internet, I can just buy Internet. But, let's try and figure it out.

My cable bill for regular, non-digital cable is right around $50 a month. For that $50, my MythTV machine is recording 2 current-events style shows (the NBC Nightly News and The Daily Show with Jon Stewart), 4 hour-long dramas, and 5 half-hour sitcoms.

Now, let's do some math. I am paying $600 per year for cable TV, and out of that I am watching 11 shows. So, the cost-per-show per-year comes out to about $55. In order for iTunes to be compelling, it needs to beat that number. Apple hasn't announced the pricing for a season pass yet, but we can assume that it will be less than $48 ($2 per episode times 24 episodes). So, things are starting to look pretty good.

Unfortunately, things like the Daily Show are going to be more expensive, because you get a lot more than 24 episodes of that in a year. And in fact, the multi-pass for the Daily Show is $10 per month.

So, my math has to get a little bit more complicated:

9 season-pass shows * $48/season = $432

2 multi-pass shows * $120/year = $240

Total for a year's worth of TV on iTunes = $672
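As a sanity check, the arithmetic above can be sketched in a few lines of Python. The prices are the ones assumed in this post ($2 per episode, 24 episodes per season, $10 per month for a multi-pass), not announced pricing, and the names are made up for illustration.

```python
# Assumed prices from this post; Apple has not announced season-pass pricing.
CABLE_PER_YEAR = 50 * 12          # $50/month for non-digital cable
SEASON_PASS = 2 * 24              # $2/episode * 24 episodes (upper bound)
MULTI_PASS_PER_YEAR = 10 * 12     # $10/month multi-pass


def itunes_cost_per_year(season_shows, multi_shows):
    """Yearly iTunes cost for a mix of season-pass and multi-pass shows."""
    return season_shows * SEASON_PASS + multi_shows * MULTI_PASS_PER_YEAR


itunes = itunes_cost_per_year(season_shows=9, multi_shows=2)
print(f"iTunes per year: ${itunes}")                        # $672
print(f"Difference vs. cable: ${itunes - CABLE_PER_YEAR}")  # $72
print(f"Cable cost per show: ${CABLE_PER_YEAR / 11:.2f}")   # $54.55
```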

Only $72 more than Comcast. That's not too bad, when you consider that:

- There are no commercials in the video content served through iTunes (for now).

- The shows are all portable -- I can watch them on my PowerBook, or a video iPod.

- I would actually own the shows -- if I had enough local storage, I could save them all, and go back in time and watch whatever I wanted, whenever I wanted.

- Things like the NBC Nightly News are already available on the Internet for free, so it might be free on iTunes too.

- A season pass will hopefully cost less than buying each episode individually, which makes the above number look better.

- Not all shows have 24 episodes in a season (e.g. "Battlestar Galactica"), further making the above number look better.

Of course, the negatives are that the quality of the video isn't as good (the resolution is about a quarter the size of regular TV resolution) and the content won't be available until a day after it originally airs on broadcast TV. But I very rarely watch things in realtime anyway, so I don't think that will be too big of a deal for me.

So, I'm going to be watching the pricing for Apple's Season Pass content with interest. If it is really cheap, then I could potentially start saving some real money versus buying cable TV.

-Andy.

Technorati Tags: Apple, Macintosh, DVR, iTunes, Comcast, Video On Demand

December 23, 2005

Merry Christmas to Andy, from Andy

So, in typical "guy" fashion, I left all of my Christmas shopping until very nearly the last minute. I managed to get everything done during the course of a four hour shopping sprint today, which is great. What is not so great (from my wallet's perspective) is that I was bitten by the "impulse purchase fairy", and picked up one of those new-fangled Nokia 770s at CompUSA:

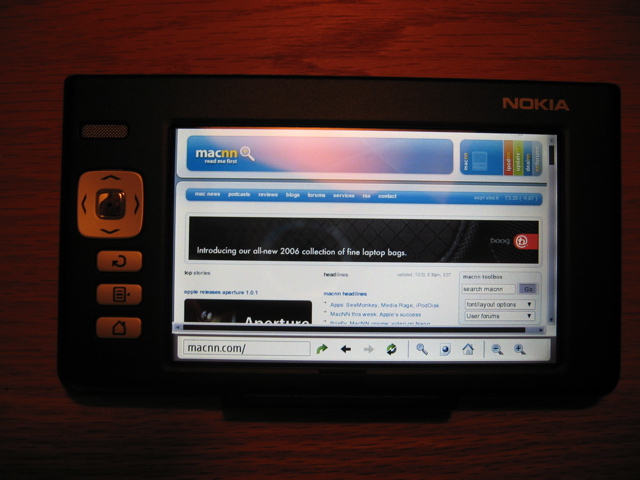

My initial impression, after mucking around with it for a bit this evening, is that this little device is going to be a worthy investment. The screen is pretty amazing (as you can tell from how macnn.com looks), yet the device is super tiny and lightweight.

Expect more nerdy ramblings as I play with my new toy.

-Andy.

Technorati Tags: Nokia 770

December 03, 2005

DSL Downtime

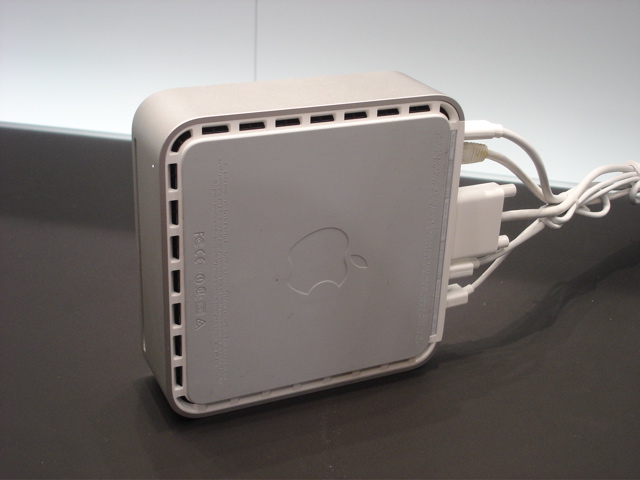

So, to add complication to everything else that is going on, Kevin's and my DSL connection went down last night. It all started a few weeks ago, when Kevin got onto his latest kick, which is to try and do his own server/MythTV/Linux box. I told him that if he wanted to do his own Internet thing, he could buy a cheap Ethernet switch, and peel off his own slice of Internet before it goes into my server/router/NAT/firewall, redefine.

And of course, because Kevin does everything that I say, he went out and bought an Ethernet switch, to go with the new machine. With DSLExtreme (our ISP), we have like 8 static IP addresses. So last night (before heading out to the movies with Pratima and Kalpana), I went on DSLExtreme's website, and added a second static IP address to our account, for Kevin.

And that is when the trouble started.

I think something like 10 minutes after I added that second IP, our Internet was down. Of course I didn't notice for a few hours, since I was out. And by the time I did notice, it was late, so I just went to bed, hoping it would be all better when I woke up.

Well, it wasn't. So I called tech support today, and they told me they would check the line, and call me back in 30 minutes.

Well, they never called back. So, approximately 12 hours later, I called them back, but this time, from Illinois. After the tech support guy spent some time messing around, he said that there was a problem with the router (which I surmised on my own), and that he would call me back in 30 minutes after he escalated the issue.

This time, he actually did call me back, but unfortunately, it was an instance of "good news/bad news". The good news was that he fixed the router. The bad news was that he changed my IP address. This is bad, because I don't have any out-of-band way to get at redefine, in order to change the IP configuration of my machine.

But luckily, as it turns out, I do have out-of-band access to redefine -- Kevin. Thankfully, he was at home, and I walked him through re-configuring redefine, and so now I am back in business.

Woo-hoo! Geek tax paid. Thanks Kev.

-Andy.

November 21, 2005

Upgrading my MythTV machine to Ubuntu Breezy

In the mood for inflicting pain on myself, I decided to upgrade my perfectly functioning MythTV machine from Ubuntu 5.04 (Hoary) to Ubuntu 5.10, the Breezy Badger. I didn't have any really good reason to attempt this upgrade, except for a morbid curiosity as to how it would work. Or not work, as the case may be. After performing the upgrade, it seems like apt decided to wipe out all of the MythTV packages, instead of upgrading them to the newest version.

After re-installing these packages, I found:

- The mythbackend process could no longer login to the MySQL database,

- The ivtv drivers for my Hauppauge PVR-250 TV capture card were not included in the new 2.6.12 kernel,

- The IR drivers for the remote control of the PVR-250 were not included in the new kernel, and

- The "mythtv-themes" package doesn't appear to be in any of the official Ubuntu repositories, rendering the mythtv-setup and mythfrontend programs un-runnable.

Fun!

-Andy.

November 20, 2005

Kevin's latest PC

So, ever since I bought my new iMac, this has brought "computer buying frenzy" to Sunnyvale. Kevin has upped the ante by purchasing two machines -- an iMac (for GarageBand), and a Sony of his owny (for Linux, Apache, and MythTV). The iMac hasn't arrived from Apple yet, so Kevin scooped up the Sony VGC-RC110G today, to start playing with that first:

In the Reitz family tradition, I made him take the machine apart before anything else happened with it. One of the reasons why Kevin chose this particular machine is because it is supposedly very quiet. Taking a look inside, this could certainly be the case. The 400W power supply has quite a large fan in it, which hopefully will spin at a lower RPM. The video card has only a heat sink (no fan), and the CPU has a heat pipe (potentially water cooled) combined with the biggest heatsink that I have ever seen (and I've seen the inside of the PowerMac G5). Sitting beside the heatsink is an even larger fan than what is in the power supply.

So, there is every possibility that this could be one quiet machine. I don't think it will be quieter than my iMac, but it will certainly be quieter than my Dell Precision Workstation 420 (which has at least one fan that is in some stage of going bad, so has been making quite an annoying racket for months now. But not annoying enough for me to fix it!).

Anyways, hardware-wise the Sony PC consists of an Intel 945P chipset (on an Intel-made motherboard), a Pentium D 830 (dual core Pentium 4 running at 3.0GHz), 1GB of RAM, and a 250GB SATA disk. The machine also includes an ATI X300 PCI-Express video card (which can probably be made to work in Linux), and a Sony "Giga Pocket" video capture card. This card doesn't appear to be supported under Linux, but I found that it has a Conexant CX23416-22 chip on it, so getting it to work under Linux might just be a possibility. I am encouraging Kevin to work with the folks behind the ivtv project to see if this can be made a reality. I think it would be a nice story to take a piece of hardware that initially only worked with Sony's proprietary TV capture software, and has since been expanded to work with Windows Media Center Edition, and make it work in Linux.

Expect more updates on that, and Kevin's general progress with this machine, in the coming months. For now, there is a gallery of photos available for your enjoyment.

-Andy.

Technorati Tags: Linux, Sony, VGC-RC110G, Giga Pocket, MythTV

November 16, 2005

Goodbye Slashdot, hello digg

Late last week, I saw a link called "digg vs. slashdot" posted to O'Reilly Radar. Curious, I skimmed the article, and then checked out digg. At first glance, it didn't really grab me -- but then I read the "about" blurb, and found that it is like Slashdot + Wiki, and got intrigued.

O'Reilly has also come through with a link to a BusinessWeek article about digg, and I'm sold. I have been pretty unhappy with Slashdot for awhile now -- duplicate postings, low signal-to-noise ratio in the comments, etc. I have never even bothered to get a Slashdot user account, because I just don't see the point. I have never bothered to add the site to my RSS reader, and I have gotten down to checking it only a few times a week.

But all along, the basic problem with Slashdot hasn't been the site itself -- but rather its editorial approach. And it is really looking like digg is fixing that, in a social software, "let's harness the collective intelligence of everyone", sort of way.

Which I really like. So, check out digg!

-Andy.

Technorati Tags: Slashdot, digg

November 03, 2005

Distressed about DRM

I read a fantastic article over at Ars Technica about the MPAA's latest attempt to add insane DRM to all of our lives. Basically, the giant content conglomerates are so afraid that people might see or hear their content without actually paying for it (gasp!), that they are going to great lengths to coerce government to coerce hardware manufacturers to make devices that coerce consumers into playing by the rules. And of course, the rules are going to be written by the content conglomerates, so they will be more restrictive and draconian than ever.

Whenever I read an article like this, I find it to be really distressing. I think that if the MPAA were to succeed in all of their goals -- trying to consume content would become not only expensive, but annoying as well. I think that the more the content conglomerates try to crack down, the more people are going to either:

- Turn the TV off,

- Turn to piracy (because as we all know, these restrictions won't stop the pirates), or

- Turn to small content producers, who are thrilled when anybody consumes their stuff.

The optimist in me hopes that we'll have this sort of cheap and fair-use friendly content in the future, but currently, my inner pessimist is winning out.

-Andy.

November 02, 2005

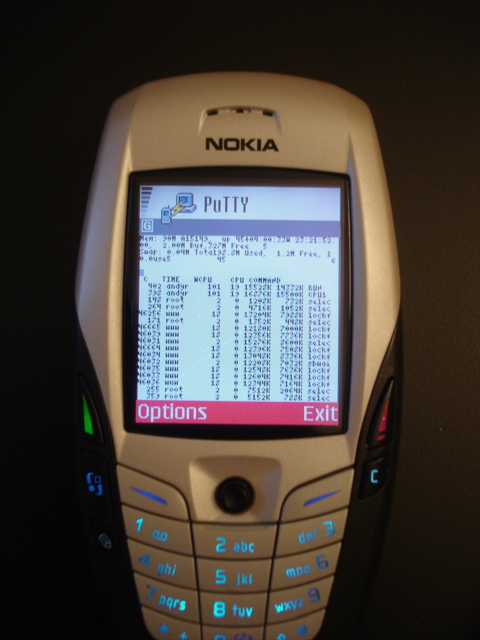

PuTTY on my cell phone

A few weeks ago, one of the high priced Tibco consultants that we had in the office mentioned that it was possible to obtain an SSH client for my Nokia 6600 phone. At the time, I didn't do anything about it. But I had some free time tonight, so I decided to do some research. And in fact, he was right -- some crazy folks have ported PuTTY to the Symbian OS, which is what my phone runs.

Behold!

Because I am buying the all-you-can-eat GPRS Internet plan from T-Mobile, I was able to SSH directly from my phone, through T-Mobile's network, through the Internet, to my FreeBSD machine that was about 20 feet away. That's sweet!

The screen and keyboard (specifically, the lack thereof) make this whole thing rather impractical. But I was able to run top, and even my most-favorite of all text editors, joe.

So, I think I have definitely scored myself a little geek toy that I can show off in the appropriate settings.

-Andy.

October 15, 2005

Playing with FreeBSD 6

So, I picked up a spare desktop recently at work, and I have finally wound down enough of my current development/deadline stuff to play with it. I decided to install FreeBSD on this machine, to serve as my sandbox for playing with hot new Open Source software (like Wikis, social bookmarking apps, etc.). My sub-goal, however, is to play with FreeBSD. My personal webserver in my apartment has been running FreeBSD 4.x for years, but I have kindof "lost track" of current developments in FreeBSD.

To that end, I installed FreeBSD 6.0 beta 4 (which I had on CD), and used cvsup and "make world" to upgrade to the just-released FreeBSD 6.0 RC1. So far, things have been quite smooth. FreeBSD detected all of the hardware, and for being not-yet-finished, things seem as stable and polished as ever.

Due to the fact that Ubuntu Breezy came out the other day, I configured X and Firefox on the FreeBSD machine, so that I could use it today while my main Ubuntu desktop was upgrading itself. The only problem that I had was convincing my Logitech USB trackball to make the "scroll" button emit a "middle click" in FreeBSD. This works like a charm on Ubuntu, but with FreeBSD, I had to do some hacking. I tracked the mouse management stuff to a daemon called "moused(8)". It seems like the problem that I had was that by default, my mouse was emitting button 4, but I wanted it to emit button 2 (middle mouse button). So, I found the -m option in the man page, which looked like it would do what I wanted:

    -m N=M  Assign the physical button M to the logical button N.  You may
            specify as many instances of this option as you like.  More than
            one physical button may be assigned to a logical button at the
            same time.  In this case the logical button will be down, if
            either of the assigned physical buttons is held down.  Do not put
            space around `='.

Unfortunately, the first 20 or so times that I tried this option, I couldn't get it to work right. The mouse buttons either didn't behave properly, or my button 4 didn't emulate the middle mouse button. Finally, after much struggling, I re-read that passage very carefully. The option is N=M, but the text immediately following that talks about assigning M to N. Confusing!
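To keep the direction straight, here is a small Python sketch that builds the option from a physical-to-logical mapping. It only formats the arguments described in the man page excerpt above; the helper name `moused_button_args` is made up for illustration, and it does not run moused itself.

```python
def moused_button_args(physical_to_logical):
    """Build moused(8) '-m N=M' arguments.

    Per the man page, N is the logical button and M is the physical
    button, so the *logical* number goes on the left of '='.
    """
    args = []
    for physical, logical in sorted(physical_to_logical.items()):
        args += ["-m", f"{logical}={physical}"]  # no spaces around '='
    return args


# Physical button 4 (the trackball's scroll button) should act as
# logical button 2 (middle click).
print(" ".join(moused_button_args({4: 2})))  # -m 2=4
```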

But, I made the proper adjustments, and now all is well. I used FreeBSD running xfce4 all day today, and pleasantly enjoyed the experience. We'll see what Ubuntu Breezy holds in store for me on Monday.

-Andy.

September 21, 2005

What is the best Windows RSS reader?

Without a doubt, the most frequently asked question that I receive when I evangelize blogging and RSS at work is "What is the best RSS reader for Windows?". Unfortunately for me, I do not have a good answer to this question, as I have all but stopped using Windows on a daily basis.

But, since 99.8% of EDS employees use Windows, and because I really want to promote blogging and RSS, I would like to have an answer to this question. So, with Google and Virtual PC by my side, I am going to list some popular Windows-based RSS readers in this post. Hopefully, this list, combined with the comments, will help me to arrive at an answer.

When I googled for "best windows rss reader", I found the following:

- Directory of the Best RSS readers

- Wikipedia: free Windows RSS readers / commercial Windows RSS readers

- RSS Info (blogspace.com)

- RSS Quickstart Guide (Lockergnome)

Based upon the above research, it looks like the top RSS readers for Windows are SharpReader, FeedReader, and FeedDemon.

Well, I was hoping to be able to try a few of these out on Virtual PC, but I have spent an hour patching and installing the .NET 1.1 runtime on Windows 2000. So, I ask the Internet -- which of these readers is worthy of my recommendation?

-Andy.

September 16, 2005

Solaris finally has industrial-strength logfile rotation

In all of my years using Solaris, I have always thought that their solution for rotating the core system log files was a joke. The "utility" (I use the term loosely) that Solaris used to ship with was called newsyslog. It was an incredibly simplistic shell script, and wasn't really re-usable, in terms of being able to rotate log files other than /var/adm/messages and /var/log/syslog.

For years, the Open Source UNIXes have included utilities for managing the system log files based upon a flexible configuration file. FreeBSD, for example, includes the newsyslog utility, which reads from newsyslog.conf, in order to decide which log files to rotate, and how they should be rotated. It's great, and I have thought for years that Solaris should have something like this too.

So, it was a pleasant surprise today that when I went to setup log rotation for Apache running on a Solaris 10 server at work, I found that Sun has finally beefed up this part of Solaris. Gone is the weak Solaris newsyslog, and in its place, we welcome logadm. It looks like this utility became available in Solaris 9, but it was news to me until today.

This logadm thing seems pretty nice -- it is super-flexible, in that it took care of all of the quirks around rotating Apache log files. One odd thing about logadm is that every time it runs, it re-writes the config file (/etc/logadm.conf for those of you keeping score at home) in place. For example, here are the two configuration lines that I wrote today:

    # -C -> keep 5 old versions around
    # -e -> e-mail errors to areitz@aops-eds.com
    # -p -> rotate the file every day
    # -z -> compress the .1 - .5 files using gzip. this means we don't need to
    #       sleep before gzip.
    # -a -> gracefully restart apache after rotation
    /var/apache/logs/access_log -C 5 -P 'Fri Sep 16 00:24:48 2005' -a \
    '/usr/apache/bin/apachectl graceful' -e areitz@aops-eds.com -p 1d -z 1 \
    -R '/usr/local/bin/analog'
    /var/apache/logs/error_log -C 5 -P 'Fri Sep 16 00:24:48 2005' -a \
    '/usr/apache/bin/apachectl graceful' -e areitz@aops-eds.com -p 1d -z 1 \
    -R '/usr/local/bin/analog'

The comments should explain some of the options. But the whole '-P' flag was added by logadm, after it ran. This flag tells logadm when it last successfully rotated the log file, so that it knows when it should rotate again. Kindof a nifty hack for performing that function, if you ask me.
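The bookkeeping behind '-P' can be sketched roughly as follows. This is not logadm's actual implementation, just an illustration in Python of comparing the recorded timestamp against the '-p' period; the function name is hypothetical, and handling only the `d` suffix is a simplification made for this example.

```python
from datetime import datetime, timedelta


def due_for_rotation(last_rotated, period, now):
    """Return True if at least `period` has passed since the -P timestamp.

    last_rotated: timestamp string in the format shown after -P above
    period:       a string like '1d' (only days are handled in this sketch)
    now:          the current time as a datetime
    """
    last = datetime.strptime(last_rotated, "%a %b %d %H:%M:%S %Y")
    if not period.endswith("d"):
        raise ValueError("this sketch only understands periods in days")
    return now - last >= timedelta(days=int(period[:-1]))


stamp = "Fri Sep 16 00:24:48 2005"
print(due_for_rotation(stamp, "1d", datetime(2005, 9, 16, 12, 0)))  # False
print(due_for_rotation(stamp, "1d", datetime(2005, 9, 17, 0, 30)))  # True
```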

-Andy.

September 12, 2005

Hacking the Solaris partition table

It was our sysadmin's last day with EDS today, and as a result, I now have a systems administration aspect to my job. This means that lately I have been noodling around with Sun hardware and software more than I usually do. What follows is the story of how I spent a good chunk of my afternoon:

Due to our local Jumpstart process not properly partitioning the two disks in the E220R that I was trying to build today, I was forced to take matters into my own hands, and fix things manually. Since I'm a hacker at heart, this didn't pose too much of a problem for me. What did pose a problem, however, is getting the E220R box into a position where I could perform surgery on the partition table. Even in single user mode, Solaris refused to unmount /var. Thus, I was forced to find some way to get a non-local version of Solaris running, so that I could perform my surgery.

To my knowledge, Sun doesn't ship any sort of Solaris recovery CD, not even on Solaris 10. Doing a quick Google, I found a few brave souls who have posted instructions for creating your own Solaris recovery CD, but I have things to do, and don't have the time necessary to craft my own CD from scratch. The trick that I know is to boot off of the Solaris install CD, and then break the install process before it gets very far. This can usually net you some sort of shell, which is usually mostly-sortof functional.

When I tried to do this today with a Solaris 10 CD, I found that once the installer started, it mucked with the TTY to the point that when I managed to break it, I couldn't see any characters that I typed, etc. In general, the shell that I got wasn't usable at all. So I tried again, and this time managed to break into the startup sequence before the installer launched, which provided a rather functional shell.

It really seems like Sun should make this easier, however, by providing some sort of bootable recovery CD. This is one of the "rough edges" that Solaris still carries with it, that the Open Source UNIXes have mostly smoothed over. Fortunately, because Sun has given Solaris the Open Source treatment, Sun doesn't necessarily have to provide such a CD -- the community could step up and do it. Another of the advantages of Open Source.

Anyways, after getting my E220R booted off of the CD, I was able to hack the partition table on the boot disk, run newfs, and have a machine with a preserved root partition, but enlarged swap and more importantly, enlarged /var. Mission accomplished, but only after considerable effort.

-Andy.

September 09, 2005

FreeBSD 6.0 Beta 4 first impressions

By virtue of our IT person announcing that he is leaving EDS, my responsibilities are expanding. While I have prior experience with systems administration, and I have been dabbling in that space while at EDS, I think I'm actually going to have to get more serious about it now.

To get myself acquainted with a Dell PowerEdge 1750 server that we have, I decided to install FreeBSD on it. Seeing as how I haven't been on the "bleeding edge" of FreeBSD for quite awhile now (my home machine is still on FreeBSD 4.10+), I decided to give FreeBSD 6.0 Beta 4 a whirl.

I'm pleased to say that so far, it has been great. The install was a snap (well, mostly because they are still using sysinstall, which I have used many times in the past). All of the server hardware was automatically detected, including the Ethernet adapter, the built-in LSI SCSI Raid, and the dual Xeon processors. In fact, it appears as if SMP is finally enabled in the generic kernel, so I didn't have to re-compile in order to enable the second CPU (that's hot).

Unfortunately, it doesn't look like I'll be able to roll FreeBSD into production -- nobody else on my team has ever touched FreeBSD, and I'm not getting the "eagerness to learn" vibe. So, my options are either Solaris/x86 or Linux, and I think I'm going to take the Solaris/x86 route. But, in the meantime, I'm going to try and play with the new FreeBSD as much as I can. When 6.0 ships, I'm going to have to take a serious look at making the jump on my home server.

-Andy.

August 30, 2005

After 230 days of uptime...

...I was forced to reboot my FreeBSD machine today. For the last several months, Kevin and I (mostly Kevin) have been noticing some terrible latency on our DSL connection, as high as 17,000 ms for the first hop. In the past, I have found that resetting our Westell CPE has fixed the problem. But when it happened again yesterday, the CPE reboot trick didn't fix the problem.

This really screwed Kevin over, who uses Microsoft Remote Desktop frequently, and planned to work last night. It was a mild annoyance to me -- with 17,000 ms latency, the web and e-mail were basically unusable. I tried calling tech support last night, but the 24 hour help line was closed.

So, when I woke up this morning, the first thing that I did was to call. Because I knew, that if I didn't fix this thing, I would have an all-out riot on my hands (from Kevin). After fighting with the technical support person for the better part of 40 minutes, we came to an impasse. He said that everything was working fine in the network, and I was saying that my FreeBSD / MacOS X combo was definitely not to blame.

I went to work with things still broken, and resolved to come home "earlyish", and hook my Mac directly to the DSL modem, and call again. That would be a scenario that is much more understandable for tech support, and then I could get some resolution. When I got home, I checked that the latency of the DSL link was still through the roof (it was). So, I hooked my Mac up, and checked again. And I'll be damned if things weren't fine. I surfed around, did a speed test, everything -- the thing was performing like a champ.

My faith in life shaken, I came to realize that my FreeBSD box was causing the problem. So, because I didn't have time to troubleshoot any longer, I rebooted it. And that fixed it. Argh!

The Geek Tax having been paid, I am hoping that this is not a sign of impending hardware failure. Or the apocalypse. Either of those two would seriously put me out, anyway.

-Andy.

August 21, 2005

Blog redesign phase 1: color

I have commissioned Sara to help me spruce up my blog. She isn't into the "coding" part of the web (HTML, CSS, etc.), so I told her to handle all of the graphics (the part that I am bad at), and I would take care of the coding. To that end, I picked up the 2nd edition of Eric A. Meyer's (CWRU alum) book, "Cascading Style Sheets: The Definitive Guide" from Powell's when I was up in Portland. Today, I used that to help me make mock-ups of the first step in the re-design process: choosing colors.

So, I present to you Sara's three proposed color schemes:

I'm not sure yet, but I think I'm leaning towards #2.

-Andy.

August 09, 2005

Hacking Windows from Linux for Fun and Profit

I, along with the rest of my team at work, am attending Java WebServices training at a Sun facility all this week. At my workstation, there is an old Sun Ultra 10 and a Dell Precision Workstation 210. One of the computers is loaded with Windows 2000 Server, the other with Solaris 9 (you can guess which is which). I found that I couldn't login to the Windows server, so today I decided to have some fun. I brought an Ubuntu Linux live CD in with me, and managed to get the Dell running Linux.

Unfortunately, when Linux booted, I found that the network wasn't working. It appeared as if Sun wasn't running a DHCP server for the lab -- which was confirmed when Chirag plugged in his laptop looking for network. Looking at the Sparc box, I found that it was statically configured. So, I ping'd for a free address, and gave the Ubuntu box an IP on the same VLAN. But no dice -- Sun apparently has separated the Solaris and Windows boxen on different VLANs.

My next trick was to run tcpdump. Usually, by analyzing the broadcast traffic, you can sortof figure out what network the machine is on, and what the default gateway is. From there, you can pick an IP, and be on your way. Unfortunately, I was able to see broadcast traffic from quite a few networks, so it wasn't plainly obvious which network was "the one" for me. I did some trial and error, but I didn't get lucky.

So, the only way in which I could see was to somehow figure out what IP address the Windows install was configured with, and then re-use that IP on Linux. And since I couldn't login to Windows, the only way I could think would be to mount the NTFS partition on Linux, and then munge through the registry until I found what I was looking for.

And believe it or not, that is exactly what I did.

I found this MS document which explains all of the registry entries that MS uses for the TCP/IP stack in Windows 2000. Unfortunately, that document isn't 100% complete -- it focuses more on the "tunables" in the stack. However, it references a whitepaper, which had the details of where things like static IP addresses are stored in the registry.

With that information in place, all I needed to know is which file on disk houses the "HKEY_LOCAL_MACHINE" registry hive. This page told me where that file is backed up, which gave me a clue as to what I should search for on disk. In short order, I was poking around the "%SystemRoot%\system32\config\system" file (%SystemRoot% being \WINNT on Windows 2000). The Ubuntu Live CD doesn't appear to contain any sort of fancy hex editor, so I just used xxd, which I piped into less. I was able to search around in that output, until I found what I wanted, and got the Ubuntu box onto the network.

In general, this sort of hacking that I did isn't all that novel. In fact, there is a book out now, called "Knoppix Hacks" (O'Reilly), which details similar sorts of hacks that can be done from Linux. But, I am glad to have stumbled onto my own such hack, because now I get to play with Ubuntu during training. :)

-Andy.

July 18, 2005

Damn you, Rushabh!

Last week, Rushabh started poking me about enabling SSL on redefine's webserver, so that we could post to our blogs securely. This has been on my TODO list for awhile, so I decided to start down this long, dark road on Saturday. After decoding much of the SSL certificate generation and Apache configuration crap that I needed to go through, I found out that the version of Apache that I was running didn't have SSL support compiled into it.

Drat.

So today, I uninstalled my old apache, and installed a new one that had mod_ssl compiled in. At first, everything was going swimmingly. I got Apache to agree that my new SSL-enabled config file was okay, and then restarted it. All was well, but SSL didn't work. I found that I had to use the 'startssl' instead of the 'start' parameter. And of course, after I figured that out, all hell broke loose.

To make a long story short, first apache wouldn't start. Some googling told me that mod_ssl rejiggers Apache's internal API, requiring all modules to be re-compiled. Great. After a tense half hour comprised of a lot of hacking (and apache getting random bus errors later), I managed to recompile all of the PHP crap, and now things appear to be stable.

whew

-Andy.

July 15, 2005

RUG: SLM317: Adding Value with Automated Trouble Ticketing

The focus of this talk is on improving incident management, more for resource failures than end-user requests. Focus of this talk is on SIM. In the past, auto-generated tickets haven't been correlated, and there have been duplicate tickets submitted by users. Technology of Event Manager and Help Desk is advanced enough that it is worth another shot. Help Desk has more automation capabilities, Event Managers more dynamic.

Central issue is that alerts say what physical resource is broken, not what service is affected. Not possible to automatically notify users.

Solution: In the CS tradition, insert another layer in between EM and Help Desk. This is SIM (Service Impact Manager). Event Management can reduce event flow (filtering, duplicate detection, enrichment, etc.). Correlation not required by SIM model. Needs work to define service model -- can use discovery to determine infrastructure & some config/topology, but need to define actual user-perceived services by hand. Can do master/child tickets automatically. List of services affected in ticket can be dynamic (as additional services go down or get fixed).
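To make the idea concrete, here is a toy sketch in Python of what the SIM layer does with incoming events. It is not BMC's product or API; the service model, resource names, and function are all hypothetical, and the model is defined by hand, as the talk said it must be.

```python
# Hand-defined service model: service -> resources (CIs) it depends on.
# All names here are hypothetical.
SERVICE_MODEL = {
    "email":    {"mail01", "san01"},
    "payroll":  {"db01", "san01"},
    "intranet": {"web01"},
}


def impacted_services(model, failed_resources):
    """Map resource-level alerts to services, dropping duplicate alerts.

    Returns {service: sorted list of its failed resources}, which is
    roughly the master (service) / child (resource) ticket structure.
    """
    failed = set(failed_resources)          # duplicate detection
    return {
        service: sorted(deps & failed)
        for service, deps in sorted(model.items())
        if deps & failed
    }


alerts = ["san01", "san01", "web01"]        # raw events from the EM
for service, resources in impacted_services(SERVICE_MODEL, alerts).items():
    print(service, "<-", ", ".join(resources))
```

Re-running the function as alerts arrive or clear gives the dynamic list of affected services mentioned above.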

IDEA: event suppression? Change tickets that you cut in HD could have CI information in them, and that could then flow into EM, to automatically suppress alerts during change.

My summary: The idea of a SIM seems like a reasonable one. I didn't get a lot of details about BMC's product, so I can't say if that is something that I would want to see in our environment or not. But I think that there is a lot of potential in the EM/SIM/HD combo for doing automation (which is my bread and butter at EDS).

RUG: ATR521: Integrating your IT Management tools with BMC Atrium

BSM: aligning business with IT- requires business awareness and context across IT silos

- tools need service centric view instead of infrastructure centric

- ability to manage business services across entire IT infrastructure

- CMDB

- Shared service model

- Dashboards

- Open foundation for information sharing and process collaboration across BMC products and 3rd party solutions

- shared data repository, common user interface

- CMDB (Core BSM Data)

- Service model (business relevance) (Core BSM Data)

- web services and data access (common data abstraction layer)

- reporting -- aggregates (presentation layer)

- view -- management dashboards (presentation layer)

- Provide Service Model Editor (operates on CMDB -- where model is stored?), API for accessing Service Model

- Examples: SLAs, Change Management (determine which components affected are part of business service), asset management from service perspective

- In order to get Service Model Editor, have to buy SIM (today)

- SIM maps incoming events to service model, and correlates to CI

RUG: DEV562: Safely Modifying a Packaged Application to allow Upgrade to Future Versions

The basic philosophy for upgrades is NOT to re-do all customizations for each new version; we want to try and make it more automatic (or at least, as automatic as possible). Automatic upgrades are possible, but there are some manual steps, and care must be taken.

The merge problem:

* original app -> vendor new version ->

|-> my updates to app -> NEW MERGED VERSION
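The diagram above is the classic three-way merge. As a minimal sketch (my own illustration, not anything the speaker showed), here is the decision rule for a single definition, say a field label, given the original value, the vendor's new value, and my customized value. It assumes all three values are non-null.

```java
import java.util.Objects;
import java.util.Optional;

class ThreeWayMergeSketch {
    // Merge one definition. An empty Optional means both sides changed it
    // in different ways, so a person has to resolve the conflict.
    static Optional<String> merge(String base, String vendor, String mine) {
        if (Objects.equals(vendor, mine)) {
            return Optional.of(mine);    // both sides agree (or nobody changed it)
        }
        if (Objects.equals(base, mine)) {
            return Optional.of(vendor);  // only the vendor changed it
        }
        if (Objects.equals(base, vendor)) {
            return Optional.of(mine);    // only I changed it
        }
        return Optional.empty();         // both changed it differently
    }

    public static void main(String[] args) {
        System.out.println(merge("Status", "State", "Status"));
        System.out.println(merge("Status", "Status", "Ticket Status"));
        System.out.println(merge("Status", "State", "Ticket Status"));
    }
}
```

The rules further down (add instead of change, don't repurpose fields) are really about keeping every definition out of that last, conflicting case.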

- How do you make it so that two independent teams making changes don't conflict with each other?

- Decide whether modification is really needed?

- Keep an eye on where development is going (vendor and internal)

- Plan and follow process during upgrade

- Test and resolve conflicts

- Important to the business, or just legacy?

- Is it an add-on or built-in to the application?

- Add-ons generally safe, built-in is intrinsic to application, much trickier

- Know vision and direction of the application supplier

- consider the philosophy of the application

- more likely to be compatible as upgrades happen

- Develop a vision and direction around the application

- Do not repurpose fields (OK to add fields; OK to use existing fields for intended purpose; DON'T arbitrarily re-purpose fields)

- Do not change existing workflow (OK to add workflow; OK to copy existing workflow and change the copy [pick different name prefix]; OK to disable existing workflow [upgrade will re-enable] - only change allowed)

- Do not change permissions (Add new groups/permissions, don't touch BMC's)

- All fields and VUIs of BMC forms will have IDs in reserved range

- During upgrade, will not modify or delete any field that is not a BMC field

- BMC is free to change definitions of fields they own (OK to add new groups and permissions; don't modify perms on BMC's groups)

- During upgrade will not modify or delete any workflow that is not BMC's

- BMC free to modify its own workflow in existing way

- generate an export file of all definitions in the AR system server

- can also backup at database level

- Available from developer site

- makes list of all disabled workflow (will need later)

- Helps you find what needs to be re-disabled after upgrade

- Run in import mode to do re-disabling for you

- records all permissions for your groups

- creates a file containing a list of all workflow/fields/forms in the system and the permissions assigned for specific groups

- developer community (soon)

- Restore disabled forms and permissions

- Restore views (which were exported before-hand) -- note that new fields will not show on view, and will have to be added manually

- History/change data will be preserved

- direct modifications to factory definitions, make again

- modified qualifications of any application table fields need to be restored

- you need to run a suite of tests for the application after the upgrade

At RUG 2005 today

I am attending the last day of the Remedy User Group (RUG) conference today. Much like I did for JavaOne, I plan to blog about each session that I attend. So, to all of my non-nerd readers: you have been warned.

-Andy.

July 11, 2005

iPhoto -> Gallery

So, I've been using iPhoto to manage my pictures ever since I got my first Mac. And while I'm not always happy with it, iPhoto does allow me to at least keep track of the pictures that I'm taking with a minimal amount of effort. iPhoto really falls down when you want to export your photos to the web. I don't have .Mac, so the only other option is some canned HTML that looks kind of funky.

So, I have been using Gallery to fulfill my pictures-on-the-web needs for some time now. However, one pain point has been getting my photos from iPhoto into Gallery. Basically, I have been doing a lot of manual effort, which has consisted of exporting pictures from iPhoto, scp'ing them to my server, then manually importing them into Gallery. The whole process is slow, repetitive, and generally sucks.

I had been thinking about trying to make things easier via Automator, when I stumbled across the free iPhotoToGallery software. This software does exactly what I want -- it provides an easy-to-use interface for exporting my photos directly from iPhoto to Gallery, without any of the annoying pain in-between. It seems like this software is a little rough around the edges, but so far, it has been working for me.

To celebrate, I have posted two new galleries of pictures: July 4th pictures from Chicago, and pictures from my trip to Antioch last Friday.

-Andy.

June 30, 2005

JavaOne: TS-7208: JXTA Technology Beyond File Sharing

P2P makes sense now:

- more people (and machines) connected, more data at the edge

- more bandwidth

- more computing power

- physical addressing model (URLs, IPs)

- centralized DNS

- no QoS for message delivery

- optimized for point-to-point (limitations on multicast/anycast)

- topology controlled by network admin, not applications

- no search/scoping at network level

- binary security (intranet or internet)

- highly decentralized and reliable

- network protocol for creating decentralized virtual P2P network

- set of XML protocols, bindings for any os/language

- overlay network, decentralized DHT routing protocol

- mechanisms, not policies

- open source (want it to become core internet tech, wide adoption)

- www.jxta.org

- JXTA addresses dynamically mapped to physical IP

- decentralized and distributed services (ID, DNS, directory, multicast, etc)

- easy to create ad hoc virtual networks (domain)

- Brings devices, services, and networks together

- enables interactions among highly dynamic resources

- storage backup (321 Inc.'s LeanOnMe)

- Brevient Connect web conferencing

- grid computing - Codefarm's Galapagos

- SNS - social network application (most used P2P app in China)

- Verizon IOBI (trying to lower the cost of delivering content over the Internet)

JXTA status

- JXTA-Java SE (June 15th release 2.3.4)

- APIs and functionality frozen

- Quarterly release schedule

- full implementation of JXTA protocols

- JXTA-C/C++ (2.1.1)

- standard peer

- extended discovery

- linux, solaris, windows

- rendezvous support

- JXTA-Java ME (2.0)

- edge peer only

- CDC 1.1 compliant

- community: C#, JPython

- enhance ease of use and simplify network deployment

- enhance performance, scalability, security

- standardize specification further through public organization

JavaOne: TS-7318: Beyond Blogging: Feed Syndication and Publishing with Java

Starts with overview of RSS, including uses for RSS, news readers, etc. Then moves into some more technical details of an RSS feed. Shortcomings of feeds:

- Users need one-click subscribe (no standards yet) - Safari RSS doing a good job

- 10% of all Feeds not well-formed XML

- Feeds can be lossy (don't poll often enough, will miss stuff)

- RFC 3229 (FeedDiff) can be used to address this

- Polling based -- traffic waste; HTTP conditional get and caching ease pain

- ROME serialization

- XML DOM serialization

- Template language: Velocity, JSP specification

- Plop it on a web server that supports conditional HTTP GET, etags, etc.
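For the conditional GET part, here is a small Java sketch of the decision a feed server makes when a news reader polls with an `If-None-Match` header. It is a simplification written for these notes: the class and method names are made up, and splitting the header on commas ignores the corner case of a comma inside a quoted tag.

```java
class ConditionalGetSketch {
    static final int OK = 200;
    static final int NOT_MODIFIED = 304;

    // Drop the weak-validator prefix so that W/"v2" compares equal to "v2".
    private static String opaque(String tag) {
        return tag.startsWith("W/") ? tag.substring(2) : tag;
    }

    // ifNoneMatch is the client's If-None-Match header (null on a first
    // fetch); currentEtag is the tag of the feed as it exists right now.
    static int statusFor(String ifNoneMatch, String currentEtag) {
        if (ifNoneMatch == null) {
            return OK; // first fetch: send the whole feed
        }
        for (String candidate : ifNoneMatch.split(",")) {
            String tag = candidate.trim();
            if (tag.equals("*") || opaque(tag).equals(opaque(currentEtag))) {
                return NOT_MODIFIED; // the reader already has this version
            }
        }
        return OK; // the feed changed since the reader last polled
    }

    public static void main(String[] args) {
        System.out.println(statusFor(null, "\"v2\""));
        System.out.println(statusFor("\"v2\"", "\"v2\""));
        System.out.println(statusFor("\"v1\"", "\"v2\""));
    }
}
```

A 304 response has no body, which is where the bandwidth savings for polling-heavy feeds come from.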

- OLD: XML-RPC based, ad hoc, simple: Blogger, MetaWeblog (most popular), Movable Type, WikiRPCInterface

- New: The REST based Atom Publishing Protocol

- Why not SOAP?

- Supports features of existing protocols

- plus administrative features (add/remove users, etc)

- spec still under development, will be finished soon

- resources exist in collections

- examples: entries, uploaded files, categories, templates, users

- Java library

- RSS/ATOM feed parsers and generators

- built on top of JDom

- Provides beans as API

- existing solutions were incomplete, stale, had unfriendly un-Java API

- simple to use, well documented

- single java representation of feeds

- pluggable

- widely used, got momentum

- Loss of information at SyndFeed level (abstract all feeds, lose special features of specific feed types)

- DOM overhead

JavaOne: TS-7722: The Apache Harmony Project

Harmony is a new project from the Apache Foundation, to do an Open Source J2SE implementation.

Why now?

- Lot of interest from community, companies

- J2SE 5 is first version where JCP license permits doing OSS implementation of JVM

- Sun cautiously supportive

Motivations:

- Not going to fork Java

- Not going to add new and incompatible technologies

- too hard, OSS can't do it -- wrong, OSS is just license & community, says nothing about technology

- license of VM is a major deal to some people

- What about Mustang? Not Open Source.

- Enable widespread adoption of Java, without re-engineering

- Provide open and free platform for Linux and BSD communities

- Java is 2nd-class citizen on Linux, Mono making big inroads...

- Get at developing economies (like Brazil), that can't afford commercial licenses, or have government directive to embrace Open Source

State of OSS Java:

- Kaffe VM - borg of VMs (absorbs everything), focus on portability, but performance lags. Harmony is going to work with them

- GNU Classpath - Java class library; long-running; work with them, but licensing issues

- Jikes RVM - research VM; VM written in Java (little C and ASM for bootstrap); good performance characteristics

- ORP C/C++ research VM (Intel research); under Intel license

- GCJ - compile Java to native binary; lot Harmony can learn from them

- IKVM - run Java bytecode on Mono (fast); not Harmony approach, but learn from them

- JavaL(?) - brazilian effort similar to Harmony

JavaOne: General Session: Java Technology Contributions and Futurist Panel

I got to James Gosling's general session over a half hour late this morning (the balance I had to strike between Caltrain's schedule and my sleep schedule). Here are some highlights:

NetBeans has a new set of tools for developing code for mobile phones. Visual app. builder, can also deploy code to phone from NetBeans. Even cooler, if the phone supports the correct JSR, you can do debugging of the app. while it is running on the phone. Single step, breakpoints, etc. -- all over Bluetooth!

They did a demo of an UAV that is powered by real-time Java. The conclusions:

- RTSJ determinism critical for navigational control

- RTSJ JVM enabled significant productivity gains over C++ (don't need to worry about low-level stuff like endianness, etc.)

- Continue to perform research on newer RTSJ implementations

Sun is putting out multimedia versions of all of the JavaOne sessions onto the Internet, free for all (including slides, audio, etc.). Allowing Open Source contributions of translated audio tracks? It seems like people can watch in English, and record their own track, in a different language.

Shifting gears, the second half of the general session is a panel, about the future of Java. On the panel: James Gosling, Bill Joy, Paul Saffo, Guy Steele, and Danny Hillis (Applied Minds, Inc.). This was just a general talk on a bunch of futuristic mumbo-jumbo -- I wasn't really engaged to the point that I extracted anything interesting.

June 29, 2005

Sun hardware: UltraSparc and V20z

I spent some time in the vendor pavilion at JavaOne today. One of the vendors that I spent some time picking on was Sun. In particular, I had hardware on the brain today, and I managed to track down like, the one person at Sun's booth, who could speak about the present and future of the UltraSparc line. In addition, this fellow was there to talk about the V20z, so I asked him a few questions about that as well.

Regarding the UltraSparc, both the UltraSparc IV and UltraSparc IIIi are currently shipping. The UltraSparc IV has both CMT (Chip Multi-Threading, sort of like Intel's Hyperthreading, but better according to Sun) and multiple cores per CPU. It sounds pretty hot. Unfortunately, it seems like it is only going to appear in Sun's higher-end servers (5U and up), in high densities. The Sun person that I spoke to wasn't sure if it would ever materialize in a workstation, but was doubtful.

The UltraSparc IV is going after highly-parallelized workloads, as is the rest of the industry. However, my group at EDS is working with some applications that are stuck on Sparc, and aren't highly parallel. So, it seems like we're going to be using the UltraSparc III series for awhile. The good news is that the UltraSparc III is up to 1.6GHz in speed now, which is not too shabby (for a RISC CPU).

Moving on to the V20z, I cut right to the chase on this one. I knew that the only reason to buy an X86-based server from Sun would be for the management features. Luckily, I was not disappointed. The V20z has two Ethernet interfaces for the purposes of management. Even better, once you configure an IP on the Ethernet, you can SSH to it, and get full access to the serial console (OS), or the internal management console! That sold me right there. In addition, the management Ethernet ports can act as a hub, which means that you can daisy chain a rack of servers together, and only take up one switch port for management. That is really, really cool. One thing is that you have to use crossover cables, because the management ports don't support auto MDI-X (while the main GigE interfaces do).

I don't really keep up with the state-of-the-art for PC servers, but I don't think that you can do SSH management of them. Sun is definitely kicking ass here.

-Andy.

JavaOne: TS-3340: Architecting Complex JFC/Swing Applications

Where is the Pain?

- GUI creation and maintenance

- Threading issues

- Widget <-> model binding (n-tier application)

- Input validation

- All about frameworks -- buying, building, or using them

- OSS frameworks are ideal (great to have source code)

- building should be last resort

- Ideally, one framework would solve problem

- doesn't exist

- Two wannabees: NetBeans platform and Spring Rich Client Platform (RCP)

- Vibrant space (new projects popping up all the time)

- Doesn't address pain points for big apps

- lack of documentation (yeah, I agree)

- Inspired by Eclipse RCP and JGoodies

- Not released yet, lot of code in CVS

- not ready yet, but keep an eye on it

- JGoodies Swing suite ($$$) - has been released, some free parts

- SwingLabs - Sun's OSS collection of helper frameworks. Also not out yet.

- DRY - don't repeat yourself. Do something twice --> build framework

- Think Ruby-on-Rails (interesting web framework)

- Ruby-on-Rails was built this way, so it was a framework that was built in an interactive manner, only for functionality that was actually needed.

Solutions

- GUI builders, but with restrictions

- Use GUI builder to generate binary artifact (like XUL), user-modifiable code sits outside

- Load generated GUI at runtime, and manipulate it

- Provide standard contract for screen creation

- Introduce form abstraction (smaller than screen, larger than a widget)

- Both practices increase opportunities for reuse

- JFace has Window and ApplicationWindow classes

- Spring RCP defines Page and View abstraction that aligns nicely with Screen/Form concept

- Swing components are not thread safe -- only supposed to be accessed by AWT event thread.

- SwingWorker (new in Java 6, being back-ported to Java 5) and Foxtrot?

- Comega - experimental programming language from MS, used as a sandbox

- Concurrency is hard -- Servlet API is admired, because it is simple and single threaded, yet it scales up

- Because there is a container that does heavy-lifting of thread issues

- Container-managed Swing (Inversion of Control)

- Have container control object, and provide services for object

- Load and initialize screens on background thread

- Handle asynchronous population and action execution
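To illustrate the threading points above, here is a minimal SwingWorker sketch: the slow work happens on a background thread, and `done()` is the hook that runs back on the event dispatch thread. The class name and the fake "lookup" are made up for the example, and it needs Java 6 or later for `javax.swing.SwingWorker`.

```java
import javax.swing.SwingWorker;

// Hypothetical worker: pretends to fetch a value slowly, off the event thread.
class SlowLookupWorker extends SwingWorker<String, Void> {
    private final String key;

    SlowLookupWorker(String key) {
        this.key = key;
    }

    @Override
    protected String doInBackground() throws Exception {
        // Runs on a background thread, so the UI stays responsive.
        Thread.sleep(50); // stand-in for a slow database or network call
        return "value-for-" + key;
    }

    @Override
    protected void done() {
        // Runs on the event dispatch thread once doInBackground() finishes.
        // This is the safe place to touch widgets, e.g. label.setText(get()).
    }

    public static void main(String[] args) throws Exception {
        SlowLookupWorker worker = new SlowLookupWorker("user42");
        worker.execute();                 // schedule the background work
        System.out.println(worker.get()); // block here only for the demo
    }
}
```

In a real screen you would not call `get()` from the event thread like the demo does from `main`; you would let `done()` push the result into the widgets.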

- JFormDesigner - excellent GUI builder tool

- Matisse - next version of NetBeans

- FormLayout - 3rd party layout manager that kicks GridBagLayout in the you-know-where

- IntelliJ IDEA - IDE

What sucks about JavaOne

Two things that suck about JavaOne: it is nearly impossible to find power outlets for my PowerBook, and not all of the rooms have WiFi. OSCON puts JavaOne to shame -- nearly every room has a giant block of power strips, for the geeks to plug their laptops in. It is deplorable that JavaOne doesn't have this.

As for the lack of WiFi, I'm finding myself a bit surprised by this one. I'm finding that since I am blogging the conference this year, having the web available is necessary in order for me to sprinkle links into my posts.

-Andy.

JavaOne: TS-7302: Technologies for Remote, Real-Time, Collaborative Software Development

Collaboration Technologies

- occurs within conversations, unlimited # of participants

- all messages to all participants

- conversations include multiple channels (conduit for information)

- collablets provide interface to channel

- software component for specific type of collaboration

- stateful within scope of conversation

- only know about their own channel

- uses XMPP (Jabber)

- message oriented XML collaboration

- web services approach to collaboration

- simple, just send XML messages using SOAP

- send messages over any transport

- can describe collablet API via WSDL, open to any web services client

Can go further, and share whole files or projects. In a shared file, can have shared editing, sort of like SubEthaEdit. It will lock the portion of the file, make the change, and when the lock times out, propagate the change to other users. Remote users can compile the shared project, which will actually happen on the source machine (to snag all dependencies).

It seems like the point of the above technologies is to make it easy to implement your own collablets, so you can build custom collaboration modules that suit your particular project or work environment. Very cool.

What about screen sharing (code walkthrough, remote peer programming)? I didn't see the speaker demo this, but it should be possible to make a collablet that does it.

Links:

- Developer collaboration is going open source at: collab.netbeans.org

- Public collaboration service: share.java.net

- Blog about remote developer issues: http://www.toddfast.com

Downside: need some sort of server to do it on Intranet, with Java Server Enterprise. Close to getting it working over any vanilla Jabber server (sweet!).

JavaOne: TS-5471: Jini and JavaSpaces Technologies on Wall Street

Jini is a tool for building SOA's. The Master/Worker pattern is a common one in Jini systems.

Generic Virtual Data Access Layer (SOA)

- Distributed data, bring together (left joins?)

- Data is federated (many masters)

- Expose common Grid-like API for clients (JAR), or WebServices, or even JDBC

- Needs virtual data dictionary, metadata to describe data, to glue together

- Decompose data access into generic workers -- break down request/response into many sub-tasks

- Decouple SQL query generation from execution

- Distribute workers, get parallelism

- Parallelize transaction w/o losing FIFO ordering, and still getting 100% reliability

- Clustered JavaSpaces -- make several instances. Make reliable by replicating data in these instances.

- Build smart proxy that handles JavaSpace client requests, and distributes work into JavaSpaces cluster

- Automate deployment and restart of JavaSpace instances, using dynamic service-grid architecture -- gets you dynamic scalability (apache httpd forking style)

- memory was expensive -- not anymore

- Bandwidth was a bottleneck -- not anymore (GigE, 10Gig)

- Commodity HW finally enterprise grade

- design grid applications incrementally with Jini and JavaSpaces

- from programmers perspective:

- how design application?

- how implement the design?

- too much talk about design, not enough about programming

- Good design always starts with something simple and evolves -- Jini and JavaSpaces make this easy: loosely coupled components, dynamic and flexible infrastructure

- Features can be added as needed

- master-worker pattern based compute farm

- A layer of abstraction over JavaSpaces API and Jini programming model

- Framework class design

- Decomposer - concrete class will decide what correct subtask size is

- Distributor

- Calculator (processor)

- Collector

- Task

- Result

- Communicator - communication and synchronization among compute nodes
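Here is a rough sketch of the master-worker roles listed above (Decomposer, Distributor, Calculator, Collector). To keep it runnable with nothing but the JDK, I've swapped in a plain `ExecutorService` thread pool where the talk used a JavaSpace and Jini workers, so this shows the shape of the pattern rather than the speakers' framework. All names are my own.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class MasterWorkerSketch {
    // Decomposer: split the range [0, n) into tasks of at most `chunk` items.
    static List<int[]> decompose(int n, int chunk) {
        List<int[]> tasks = new ArrayList<>();
        for (int start = 0; start < n; start += chunk) {
            tasks.add(new int[] { start, Math.min(n, start + chunk) });
        }
        return tasks;
    }

    // Calculator: the work for one task (a sum of squares, as a placeholder).
    static long calculate(int[] range) {
        long sum = 0;
        for (int i = range[0]; i < range[1]; i++) {
            sum += (long) i * i;
        }
        return sum;
    }

    // Distributor and Collector: hand tasks to workers, then combine results.
    static long run(int n, int chunk, int workers) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        try {
            List<Future<Long>> results = new ArrayList<>();
            for (int[] task : decompose(n, chunk)) {
                Callable<Long> job = () -> calculate(task);
                results.add(pool.submit(job));
            }
            long total = 0;
            for (Future<Long> result : results) {
                total += result.get(); // wait for each worker's Result
            }
            return total;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(run(10, 3, 2));
    }
}
```

With a JavaSpace, the master would write Task entries into the space and workers would take them, which is what makes adding workers at runtime so easy.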

- have to consider user interface for the programme

- How can we take POJO model and bring it to Jini/JavaSpaces?

- Get a lot of power in POJO approach, because we decouple from underlying system (be it Jini, J2EE, etc.)

- Spring can do remoting without API via exporters on server side, proxies on client side (talk to exporters)

June 28, 2005

JavaOne: BOF-9840: Make your java apps more powerful with scripting

These are rough notes, as I was only there for the first part of this BOF and it was pretty informal:

Expose a scripting interface to your program. This allows 3rd parties to write code that interacts with your code (think plugin). Develop features and addons more quickly & cheaply.

idea: add beanshell to existing application. Once in place, you can use beanshell to poke and prod it, and figure out how it works. Questions like: "What happens to app if I change this value?" are easy with an application that supports BeanShell.

to support scripting in an existing app, may need to provide:

- extra API to support scripting

- debugging support

- logging/diagnostic output

- CLI for interactive control

- Editing tools for recording/manipulating scripts (macro recorder)

JavaOne: BOF-9335: Scalable Languages: The Future of BeanShell and Java-Compatible Scripting Languages

Doing JSR for BeanShell, to make it standardized, and potentially part of Java proper (someday). Will give more visibility and participation. BeanShell being developed by small team, so this will expand resources. New in 2.0:

- Full java syntax compatibility

- performance: JavaCC 3.0 parser faster and smaller; caching of method resolution gives boost

- better error reporting

- Applet friendly (again) -- doesn't trip applet security; advantage of existing reflection-based implementation (do things w/o code generation)

- new features: mix-ins, properties style auto-allocation of variables (can use BeanShell as more advanced java properties file)

- Mix-ins: import java object into BeanShell namespace.

- Full java 1.4 syntax support (on all VMs)

- Some Java 5 features (all VMs): Boxing, enhanced for loop, static imports

- Core Reflection doesn't allow introspection into core types -- added this in BeanShell 2.0

- Generated classes with real Java types, backed by scripts in the interpreter.

- Scripts can now go anywhere Java goes.

- Expose all methods and typed variables of the class.

- Bound in the namespace in which they are declared.

- May freely mix loose / script syntax with full Java class syntax.

- Full java syntax on classes -- this, super, static, instance variables, and blocks. (no way to access superclass from reflection API)

- Full constructor functionality.

- Reflective access permissions (knocks out applets for the scripted classes)

- bugs

- javax.script (JSR-223) - will be a part of Java 6, powerful API for calling scripting languages from Java

- BeanShell API compiler - have persistent classes backed by scripts.
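Since javax.script (JSR-223) came up, here is a small sketch of what calling a scripting engine from Java looks like with that API. Which engines are available depends entirely on what is on the classpath, so the code falls back gracefully when the lookup returns null. The engine name "beanshell" in `main` and the `appVersion` variable are assumptions for illustration; check your engine's documentation for the names it registers.

```java
import javax.script.ScriptEngine;
import javax.script.ScriptEngineFactory;
import javax.script.ScriptEngineManager;
import javax.script.ScriptException;

class ScriptingHookSketch {
    // Evaluate a script with the named engine, or return the fallback if the
    // engine is not installed or the script fails.
    static Object evalOrDefault(String engineName, String script, Object fallback) {
        ScriptEngine engine = new ScriptEngineManager().getEngineByName(engineName);
        if (engine == null) {
            return fallback; // no such engine on the classpath
        }
        try {
            // Hypothetical application state exposed to the script.
            engine.put("appVersion", "1.0");
            return engine.eval(script);
        } catch (ScriptException e) {
            return fallback;
        }
    }

    public static void main(String[] args) {
        // List whatever engines this JVM can actually see.
        for (ScriptEngineFactory factory : new ScriptEngineManager().getEngineFactories()) {
            System.out.println(factory.getEngineName() + " " + factory.getNames());
        }
        // "beanshell" is an assumed engine name; it needs the BeanShell jar.
        System.out.println(evalOrDefault("beanshell", "1 + 1", "no BeanShell engine found"));
    }
}
```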

New BeanShell community site, includes Wiki (J2EEWiki). Wiki site is beanshell.ikayzo.org/docs. Subversion for source control.

JavaOne: TS-7725: J2EE 5.0 ease of development

J2EE specification doesn't go far enough -- need "helpers" in order to be productive and effective in order to produce a J2EE application. Certain "artifacts" are common, such as:

- Generate entity beans from DB

- Using resources (JMS, JDBC, etc)

- Using patterns (service locator, etc) and Blueprints

- Provisioning server resources

- Verifying, profiling.

J2EE 1.4 free tools:

- Eclipse - Web Tool Platform (WTP) / J2EE standard tools (JST)

- NetBeans - 4.1 just shipped (May), full support for all J2EE whiz-bangs

- NetBeans can do one-click compile-assemble-startserver-deploy-execute (Run)

- Refactoring at J2EE level (class name change propagates to descriptors)

- Ant native (project in NetBeans makes build.xml). Good for nightly builds!

- Blueprints compliant -- what is this? Need to look it up. Looks like best practices for J2EE application layout.

- Debugging: hides crap from application server in stack trace. Monitor HTTP requests. J2EE verifier tool.

- Can get JBoss plugin for NetBeans.

- Wizards for making EJB calls, doing JDBC access, or sending a JMS message

- "The focus of Java EE 5 is ease of development"

- EJBs as regular Java objects (standard interface for inheritance)

- Annotations vs. deployment descriptors (dependency injections)

- Better default behavior and configuration

- Simplified container-managed persistence

- Developer works less, container works more (app server)

- comments that guide code?

- alternative to XDoclet

- Syntax is to use '@' symbol

- only a business interface to work with

- XML descriptors replaced by annotations
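To show what "annotations instead of deployment descriptors" means mechanically, here is a self-contained sketch. The `@Component` annotation is a made-up stand-in for a real container annotation (such as EJB 3.0's `@Stateless`), and `registeredName` plays the part of the container reading metadata off the class instead of out of an XML file.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

class AnnotationSketch {
    // Hypothetical marker annotation, kept at runtime so reflection can see it.
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.TYPE)
    @interface Component {
        String name() default "";
    }

    // A plain Java object; the metadata lives right next to the code.
    @Component(name = "greeter")
    static class GreeterBean {
        String greet(String who) {
            return "Hello, " + who;
        }
    }

    // What a container might do: discover the name via reflection.
    // Returns null when the class carries no @Component annotation.
    static String registeredName(Class<?> type) {
        Component component = type.getAnnotation(Component.class);
        if (component == null) {
            return null;
        }
        return component.name().isEmpty() ? type.getSimpleName() : component.name();
    }

    public static void main(String[] args) {
        System.out.println(registeredName(GreeterBean.class));
        System.out.println(new GreeterBean().greet("JavaOne"));
    }
}
```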

Java EE 5 status

- specs still under expert discussion

- delivery date is targeted for 1Q 2006

- Many areas ready: API simplification, Metadata via annotation, dependency injection, persistence

- NetBeans 5.0 will be ready at same time

Tools mandatory for J2EE 1.4 development. Features of Java EE 5 make development easier, and will be further assisted by smart tools. I knew that there was a new version of NetBeans out, which I was intending to check out at JavaOne. Also, it looks like there is a new NetBeans book out -- "NetBeans IDE Field Guide" which is good, because I don't like the documentation for NetBeans...

JavaOne: new format

I'm going to pick up a bit of a new format. I'm going to try and blog about the parts of the presentation that are interesting to me, getting away from a full outline of the talk. I figure that the slides can probably be found online somewhere, so I'm going to focus on what my take-aways are.

-Andy.

JavaOne: TS-7159: Java Platform Clustering: Present and Future

Research goal is transparent extension of Java programming model and facilities to a clustered environment. More for performance, than failure issues.

Design Approaches:

- Cluster-aware JVMs (potentially optimal [close to hardware], but loses portability)

- Compile to cluster-enabled layer (ex. DSM; good performance, but lose portability and impedance mismatch)

- Systems using standard JVM (transforms at code or bytecode level). lose performance but get portability

- Hyperion - compiles to C code that is DSM aware

- Jackal - violates Java memory model

- Java/DSM (Rice, 1997) - piggybacks on existing DSM, no JIT

- cJVM (aka trusted JVM) (IBM, 1999) - proxy objects for non-local access (smart), no JIT

- Kaffemik (Trinity college, 2001) - based on kaffe VM, scalable coherent interface, JIT

- Jessica2 (2002) - JIT support, thread migration

- dJVM (2002) - based on Jikes

- JavaParty (1997) - pre-processor + runtime compiles to RMI. Requires language change

- JSDM (2001) - supports SPMD apps, not full Java technology

- JavaSplit - bytecode transformation, integrated custom DSM

- J/Orchestra - application partitioning, bytecode transformation

The executive summary is that there is some work going on to make Java cluster-aware at the VM level. I'm not sure why Jini wasn't mentioned more, since it seems like a natural fit for clusters. If I ever need to do some clustering, I can check in on the above projects to see if there is a fit.

JavaOne: TS-5163: XQuery for the Java Technology Geek

What is XQuery?

- New language from W3C

- Queries XML (documents, rdbms, etc.)

- Anything with some structure

- under development, not 1.0, at candidate recommendation stage

- XSLT - easier to read and write, maintain; designed with DB optimization in mind

- SQL - better for hierarchical data (things that don't fit: book data, medical records, yellow pages). DB is designed for columns of numbers.

- Procedural - define what you want, let engines optimize

- When pulling data out of XML, easier to show more context around the data

- Like breadcrumbs to book, chapter, section

- Then show not only the search term, but also the content around it

- depends on engine, indexed stores require pre-loading

- Mark Logic (presenters, demos available), eXist (OSS), Saxon

- Coolest hidden XQuery implementation: Apple's Sherlock

- a matching language to select portions of an XML document

- Like RE engine for XML; "give me every one of these where that or this"

- pronounced "flower"

- stand for: for, let, where, order by, return

- this is one expression, not five

- XQuery is technology to manipulate XML that you can find with XPath

- XQuery doesn't have to produce XML output -- can produce sequence of elements, or just plain text

- Works well on web tier

- Executes in response to HTTP requests like CGI

- Speaks XML to back-end, XHTML to front end

- advantage is easy and fast, can do blog or searchable FAQ as XQuery (backend is XML, XQuery formats and displays on frontend)

- Call XQuery stack from Java language

- Think JDBC but for XQuery

- Fits in your Java technology stack

- XQuery JSP tag library

- send results straight out, or store in variables
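The JDK doesn't ship an XQuery engine, but it does ship XPath (`javax.xml.xpath`), which is the "matching language" half of the story from these notes. Here is a small sketch of the "give me every one of these where that" idea against a made-up book document; the XML and the class name are invented for the example. For FLWOR expressions you would need a real XQuery engine such as the ones listed above.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.xpath.XPath;
import javax.xml.xpath.XPathConstants;
import javax.xml.xpath.XPathExpressionException;
import javax.xml.xpath.XPathFactory;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

class XPathSketch {
    // Made-up sample document.
    static final String XML =
        "<book>"
        + "<chapter n='1'><title>Intro</title></chapter>"
        + "<chapter n='2'><title>Queries</title></chapter>"
        + "</book>";

    // Return the text of every node the XPath expression selects.
    static List<String> select(String xml, String expression) throws XPathExpressionException {
        XPath xpath = XPathFactory.newInstance().newXPath();
        NodeList nodes = (NodeList) xpath.evaluate(
            expression, new InputSource(new StringReader(xml)), XPathConstants.NODESET);
        List<String> texts = new ArrayList<>();
        for (int i = 0; i < nodes.getLength(); i++) {
            texts.add(nodes.item(i).getTextContent());
        }
        return texts;
    }

    public static void main(String[] args) throws Exception {
        System.out.println(select(XML, "//title"));
        System.out.println(select(XML, "/book/chapter[@n='2']/title"));
    }
}
```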

JavaOne: TS-7949: The New EJB 3.0 Persistence API

Got to this one late (lunch), so this is not full notes.

Extended Persistence Contexts

- Rescue the stateful session bean from obscurity

- natural cache of data that is relevant to a conversation

- allows stateful components to maintain references to managed instances instead of detached instances

- A conversation takes place anytime a single user interaction spans more than one request

- sometimes helpful to capture conversation in object(s), can optimize, manage lifecycle, etc.

JavaOne: TS-7695: Spring Application Framework

Agile J2EE: where do we want to go?

- Need to produce high quality apps, faster and at lower cost

- cope with changing requirements (waterfall not an option)

- need to simplify programming model (reduce complexity rather than hide with tools)

- J2EE powerful, but complicated

- Survival: challenges from .NET, and PHP/Ruby at low end

- Concerns that J2EE dev is slow and expensive

- Difficult to test traditional J2EE app

- EJB's really tie code to runtime framework, hard to test w/o

- Simply too much code, much is glue code (I concur, based on my experience with a J2EE portal in EDS)

- Heavyweight runtime environment -- in order to test, need to deploy

- frameworks central to modern J2EE development

- frameworks capture generic functionality, for solving common problems

- J2EE out of box doesn't provide a complete programming model

- Result is many in-house frameworks (expensive to maintain and develop, hard to share code)

- Responsible for much of the innovation in the last 2-3 years

- Several projects aim to simplify development experience and remove excessive complexity from the developer's view

- Easy to tell which ones are popular; driven by collective developer experience (refined, powerful, best-of-breed)

- Tapping into "collective experience" of developers

- Inversion of Control/Dependency Injection (sophisticated configuration of POJOs)

- Aspect Oriented Programming (AOP)

- provide declarative services to POJOs

- can get POJOs working with special features, JMX, etc.

- hollywood principle - don't call me, I'll call you

- framework calls your code, not the reverse

- specialization of Inversion of Control

- container injects dependencies into object instances using java methods

- a.k.a. push configuration -- object gets configuration, doesn't know where it came from

- decouples object from configuration from environment. Values get pushed in at runtime, so it is easy to run object in test or prod.

- configuration requires no container API

- can use existing code that has no knowledge of container

- your code can evolve independently of container code

- easy to unit test (no JNDI stub, or properties files to monkey around with)

- code is self-documenting

- shared instance, pooling

- lifecycle tied to container objects

- maps, sets, other complex types

- type conversion with property editors

- instantiation via factory methods

- FactoryBean adds level of indirection; configured by framework, and returns objects based upon that configuration.

- paradigm for modularizing cross-cutting code (code that would otherwise be scattered across multiple places)

- think about interception

- callers invoke proxy, chain of interceptors decorate that call with additional functionality, callee finally invoked, and return passes back through chain

- ideal for transaction management and security

- enabling technology, for defining own services, and applying them to POJOs.

- think of it as a generic form of the way EJB is implemented

- can be implemented with dynamic proxies

- configure objects w/o invasive API

- OSS project, apache 2 license

- Aims:

- simplify J2EE development

- provide a comprehensive solution to developing applications built on POJOs.

- aims to address all sorts of applications, large banking, or small in-house

- easy to work with JDBC, Hibernate, transaction management, etc.

- consistency makes Spring more than sum of its parts.

- don't need to deploy, can all run from within IDE if desired.
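
To make the dependency injection and interception notes above a bit more concrete, here is a minimal sketch in plain Java. It is not Spring's API: RateSource, PriceService, SimplePriceService, and WiringSketch are names invented for illustration, and the wire method only stands in for what a container would do. It assumes a JDK dynamic proxy (java.lang.reflect.Proxy) for the interceptor and uses modern Java lambda syntax.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

// Hypothetical collaborator interface; not part of Spring.
interface RateSource {
    double rateFor(String currency);
}

// Hypothetical service interface, so a JDK dynamic proxy can stand in for it.
interface PriceService {
    double convert(double amount, String currency);
}

// A plain POJO: no container API and no JNDI lookup.
// Its dependency is pushed in through a setter.
class SimplePriceService implements PriceService {
    private RateSource rateSource;

    public void setRateSource(RateSource rateSource) {
        this.rateSource = rateSource;
    }

    @Override
    public double convert(double amount, String currency) {
        return amount * rateSource.rateFor(currency);
    }
}

class WiringSketch {
    // Plays the role of the container: creates the POJO, injects its
    // dependency, and wraps it in a proxy whose handler records each call
    // before and after delegating to the real object.
    static PriceService wire(RateSource rates, List<String> callLog) {
        SimplePriceService target = new SimplePriceService();
        target.setRateSource(rates);

        InvocationHandler interceptor = (proxy, method, args) -> {
            callLog.add("before " + method.getName());
            try {
                return method.invoke(target, args);
            } finally {
                callLog.add("after " + method.getName());
            }
        };
        return (PriceService) Proxy.newProxyInstance(
                PriceService.class.getClassLoader(),
                new Class<?>[] {PriceService.class},
                interceptor);
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        // A fixed-rate stub is injected; production code would inject a real source.
        PriceService service = wire(currency -> 2.0, log);
        System.out.println(service.convert(10.0, "EUR"));
        System.out.println(log);
    }
}
```

Running main prints 20.0 followed by [before convert, after convert]. The point is that SimplePriceService never learns where its RateSource came from, so a test can push in a stub just as easily as production wiring pushes in the real thing.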

JavaOne: TS-5958: Amazon Web Services

Terms:

- AWS - Amazon Web Services

- ASIN - Amazon Standard Identification Number

- Associate ID - pass this # into all AWS calls

- REST - Representational State Transfer

- support for industry standards

- remote access to data and functionality

- about getting direct access to guts of website

- APIs that give any developer outside of Amazon programmatic access to Amazon's data and technology.

- Includes product information, customer-created content, shopping cart, etc.

- Legitimize outside access, site scraping sucks

- Third-party developers extend the Amazon platform

- Harness creativity of others

- SOAP API

- REST API

- XSLT transformation service - can apply transform to XML results before returned. Can build website with no physical template, just supply XSLT stylesheet, in order to build "virtual website".

- WSDL - documentation for schemas

- Tons of documentation & community outreach

- SOAP is standard, strongly typed, requires toolkit

- REST is convention, ad hoc, easy ramp-up, prototype in browser, really easy to use. Key-value pair based. Easy to script. Develop in browser.

- REST is about 80% used, SOAP other 20%.

- http://www.amazon.com/webservices

- developer blog: http://aws.typepad.com

- download documentation

- Amazon offers 3 different things via web services API

- Easy to use via Java

- WebServices is like an API for specific websites, allowing 3rd party developers to build new sorts of apps just like if you were to write an app for the Windows API.
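
The "key-value pair based" nature of the REST style noted above can be sketched in a few lines of Java. This is only an illustration of the idea, not Amazon's actual API: the base URL and the parameter names (associateId, keywords) are placeholders invented for the example, and the real parameter names would come from the AWS documentation.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.util.LinkedHashMap;
import java.util.Map;

class RestQuerySketch {
    // Turns a map of key-value pairs into a query string appended to baseUrl.
    static String buildUrl(String baseUrl, Map<String, String> params) {
        StringBuilder url = new StringBuilder(baseUrl);
        char separator = '?';
        for (Map.Entry<String, String> entry : params.entrySet()) {
            url.append(separator)
               .append(encode(entry.getKey()))
               .append('=')
               .append(encode(entry.getValue()));
            separator = '&';
        }
        return url.toString();
    }

    // Form-encodes one key or value (spaces become '+').
    private static String encode(String value) {
        try {
            return URLEncoder.encode(value, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            // UTF-8 is always available on a Java platform.
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        // Placeholder endpoint and parameter names, for illustration only.
        Map<String, String> params = new LinkedHashMap<>();
        params.put("associateId", "example-20");
        params.put("keywords", "java one");
        System.out.println(buildUrl("http://webservices.example.com/xml", params));
    }
}
```

Running main prints http://webservices.example.com/xml?associateId=example-20&keywords=java+one. A URL like that can be pasted straight into a browser, which is what makes the REST style so easy to prototype with.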

JavaOne 2005

After a too-short recovery time from NYC, I am in San Francisco today, attending Sun's JavaOne conference. I am going to be trying to blog about each session that I attend, and then cross-posting my public posts to the EDS blogosphere. So, for my non-computery readers (you know who you are), you're going to want to ignore the next like 3 days or so.

-Andy.

June 23, 2005

Open Source vs. Commercial Source: where is it headed?

I read a great interview with Linus Torvalds the other day. The main thrust of the interview was questioning Linus as to where the Open Source vs. Commercial Source divide is ultimately headed. Pretty interesting stuff, and well worth a read.

I have been doing some thinking about this as well recently, as I try and evangelize Open Source at EDS. My thoughts are pretty similar to where Linus is at. Open Source is going to continue to commoditize certain things like OSes, browsers, and potentially even office suites. The key for Closed Source commercial vendors is going to be to stay one step ahead of the curve, and earn their revenue by innovating. People will pay in order to be at the cutting edge, the state of the art. And companies will pay for support. Those are the two spaces that I increasingly see commercial vendors playing in.

-Andy.

May 19, 2005

Google is really cool

A couple of things about Google have been bouncing around in my head lately, and it all came together with something that I read on Slashdot today. Microsoft's CEO Steve Ballmer made Slashdot today with his prediction that Google is a one-trick pony, and as such will be dead in 5 years. Last week, I read an article by Robert X. Cringely, stating that the Google Web Accelerator is a portent of how Google will become a "platform". Thankfully, I don't think that either point of view is exactly correct.

While it's probably true that if Google just sticks to search, Microsoft will be able to do to them what they did to Netscape, I don't think that is Google's game. I think that Google is looking to be a repository for accessing data. And the "platform" (if you can call it that) will be their APIs, which allow 3rd party applications to interact with and add value to this data in their own ways.

Case in point: this Wired news article that I read the other day. It highlights several new applications that are making use of Google Maps in new and interesting ways. One of the applications that immediately grabbed me is something called HousingMaps, which combines apartment listings from craigslist with mapping information from Google. Go ahead and try it out -- it is super neat. But the reason why this application reached out and grabbed me is because this is something I could have really used the last time that I was looking for an apartment. With one click, I saw all of the current craigslist apartment listings as pushpins on Google's map. This is so awesome! And it is all made possible by the fact that Google's "platform" is eminently hackable and extendable by third parties.

Of course, the one thing that Microsoft touts over and over is that they provide a platform -- i.e. Windows -- which is a rich ecosystem for 3rd party developers to build their own applications, thus allowing the free market to serve customers in a way that no monolithic entity can. Well, guess what kids? Google can play that game too. And while I don't want to over-hype this (because hyping some company as a Microsoft-killer is a sure way to get them killed by Microsoft), I sure am keenly interested to see where this is going.

-Andy.

May 05, 2005

Finally!

My copy of Tiger finally arrived today (iWork came yesterday). My initial analysis: Tiger fixes iSync not working with my crappy Nokia 6600 cell phone, so that is worth the price of admission right there.

-Andy.

February 14, 2005

Comments disabled

I've temporarily disabled the ability to post new comments to all of the blogs hosted on redefine. The comment spam is getting pretty bad, and I need some time to regroup on a technical level, and come up with a different anti-spam solution other than a blacklist. I think that like Carl before me, I'm going to go with TypeKey. This appears to require MovableType 3.x, however, which requires both money, and time, since I can't use the FreeBSD ports collection to install it. Hmmm...

-Andy.

February 02, 2005

The Machine Marches On

Great stuff on wired.com today: "Hide Your IPod, Here Comes Bill". I read this article with a high degree of amusement. As the Microsoft machine marches on, taking over market after market, it is nice to see them stymied, as evidenced by their own employees. Microsoft employees tend to be a smart lot -- so if they are buying iPods in droves, then it seems like management should try and figure out why, instead of simply banning the practice.

From what I've read about the "PlaysForSure" program, it seems like Microsoft has solved a lot of the reasons why non-iPod mp3 players have sucked on Windows. So eventually, with this software in place, the non-iPods may start to take over the market (just like wintel PCs before them). But for right now, Microsoft has got nuthin'.

But meanwhile, the machine continues to march. I had a quick look at Microsoft's new "MSN Search" the other day, and at first glance, it appears to be a total Google rip-off -- at least from a UI perspective. It looks like the search results that it is returning still aren't as complete as Google's. But how long will it be before Microsoft can out-Google Google?

sigh.

-Andy.

The T-Mobile Tango

I wanted to have good Internet access while traveling abroad, both to keep on top of work and to keep in touch with my friends and family (and TV). Based upon the information that I had from other EDS employees who had gone to Germany, T-Mobile WiFi HotSpots were plentiful, but expensive. In fact, it is 2 euros for every 15 minutes -- 8 euros an hour. Computing the exchange rate is left as an exercise to the reader -- but suffice it to say, this is quite expensive. I did some research, however, and found that accounts on the T-Mobile HotSpot system in the USA can be used on T-Mobile HotSpots in Europe. The advantage, of course, is that in America (being the gluttons that we are), you can buy an "all you can eat" plan for a flat monthly fee. So, before leaving for Germany, I added T-Mobile's HotSpot service to my cell phone plan.

My first week in Germany, I was staying at a hotel that didn't have T-Mobile. The Wifi in the hotel was served by Swisscom, and there was no roaming agreement between Swisscom and T-Mobile. So, I didn't really try to use the T-Mobile service in Europe until last Friday, when I was at Frankfurt airport, waiting to go to England. And of course, it didn't work.

Over the weekend in London, I tried it twice more (both times at Heathrow), and was not successful in getting my account to work. So, I returned to Germany, tired and frustrated by the fact that my T-Mobile HotSpot account wasn't working. My second week in Germany, I am staying at a different hotel which is served by T-Mobile. So, I spent an hour on Sunday evening on the phone with T-Mobile, trying to resolve the situation.

I think that T-Mobile is just like any multi-national company. From the outside, it looks like one homogeneous entity. However, internally, due to regional laws and other political reasons, it is really many different sub-companies. The support website for the T-Mobile HotSpot in Germany listed two different phone numbers. In addition, the website advertises that the support personnel speak German, English, and Turkish. When I called the first number, the person told me (in broken English) that the English-speaking support personnel are only in Monday through Friday.

So, at that point, I was skunked. But luckily, I picked up a T-Mobile brochure when I was in London. That had the support number for T-Mobile UK. I called them up, and the helpful Scotsman who answered wasn't able to help me, but he was able to give me the phone number for T-Mobile HotSpot support in the USA. Once connected to T-Mobile USA, I found that my account was locked.

Why was it locked, you might ask? Because I reported my cell phone lost, and asked that my account be put on hold. When I did this, I assumed that they would lock the cell phone account, but leave the WiFi account. But no, that isn't how T-Mobile works. I have one account, and they have one giant lock, and that is how it goes. So, I had to establish a new, separate account that was WiFi-only, in order to get on the 'net. Sheesh.