Tonight, I ducked out of work early and drove down to Palo Alto, to attend a tech talk at Facebook HQ. The talk was called "Running MySQL at Scale", and was delivered by members of Facebook's database technical staff. They apparently streamed it live, but it isn't clear if they will post an archive of the talk. After the break, I'm going to drop in some random notes that I took during the talk.

-Andy.

I captured some whole slides, and posted to Flickr.

Internally, Facebook's database group is divided into 3 separate teams:

- ops group (fix it now)

- perf group (fix it in a day)

- engineering group (fix it in a month)

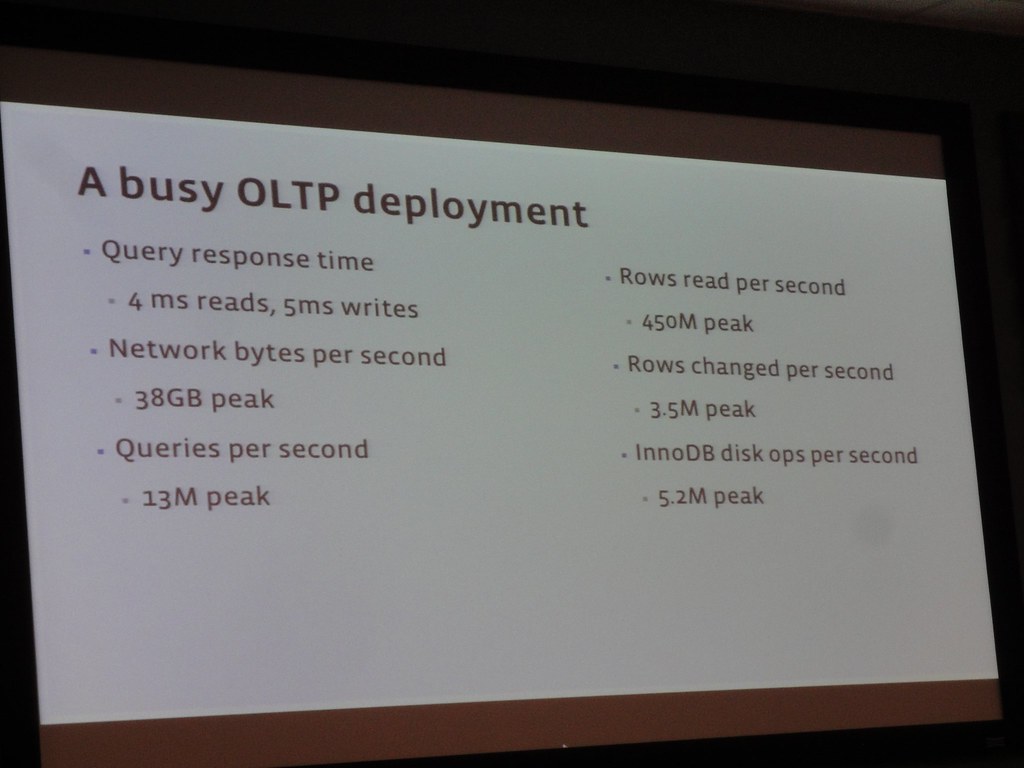

tend to be io bound, need to conserve disk reads

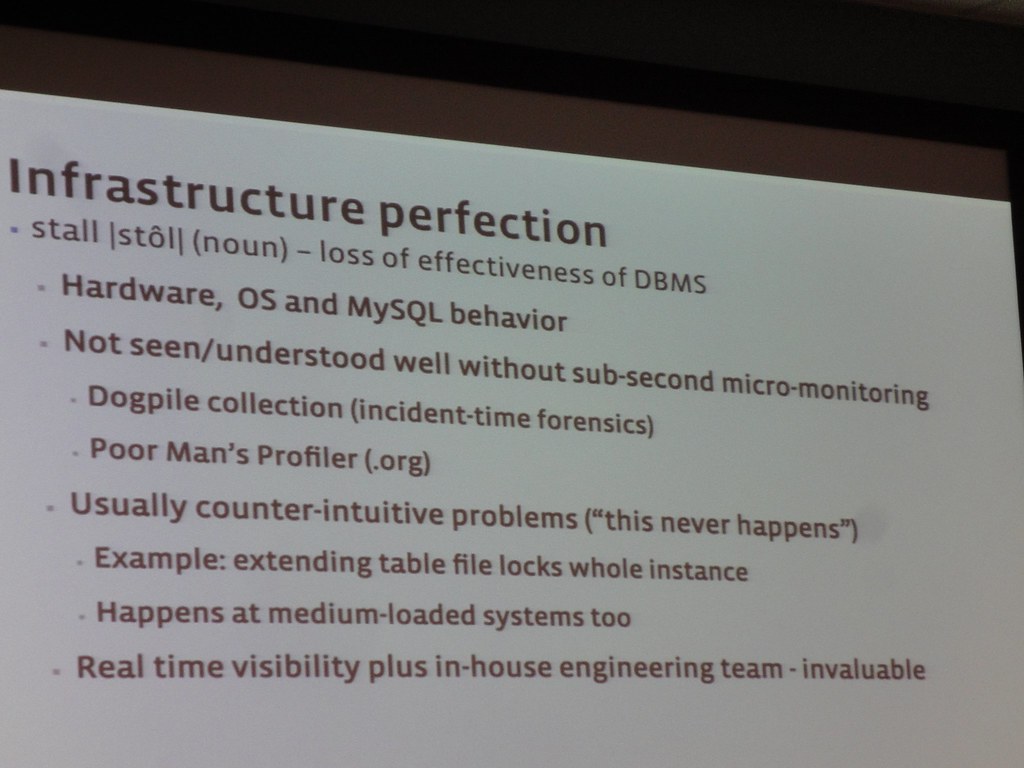

focus not on max performance, but eliminating instances of really bad performance. Use sub-second micro-monitoring, agent-based, that is triggered when agent notices a problem.

They frequently attach GDB's to running servers in order to get data about what MySQL is doing, and combine this with high-frequency monitoring scripts. They mentioned something called "Poor Man's Profiler" several times.

They have software that kills long-running queries automatically

pylander - load management tool (? couldn't find this in Google)

They mentioned a project called Scribe, which they wrote internally and open-sourced.

They also mentioned a Google project: openark kit

slave prefetching - allow replication to keep up

real-time binlog archiving. I tried to ask about this afterwards, but the answers were a little fuzzy. Basically, they try to get the binary logs off of the box as fast as possible, so that they don't loose too much data in the event of failure. The claim is that because the binary logs are sequential writes, that they don't worry about them too much from an I/O perspective.

Monitoring- macro - today vs. yesterday, or this week vs. last week

- Top-N worst performing hosts. Sharded instances.

- Extensive monitoring (often redundant) to dig into errors

They are running version 5.1.47 (engineering handles version upgrades)

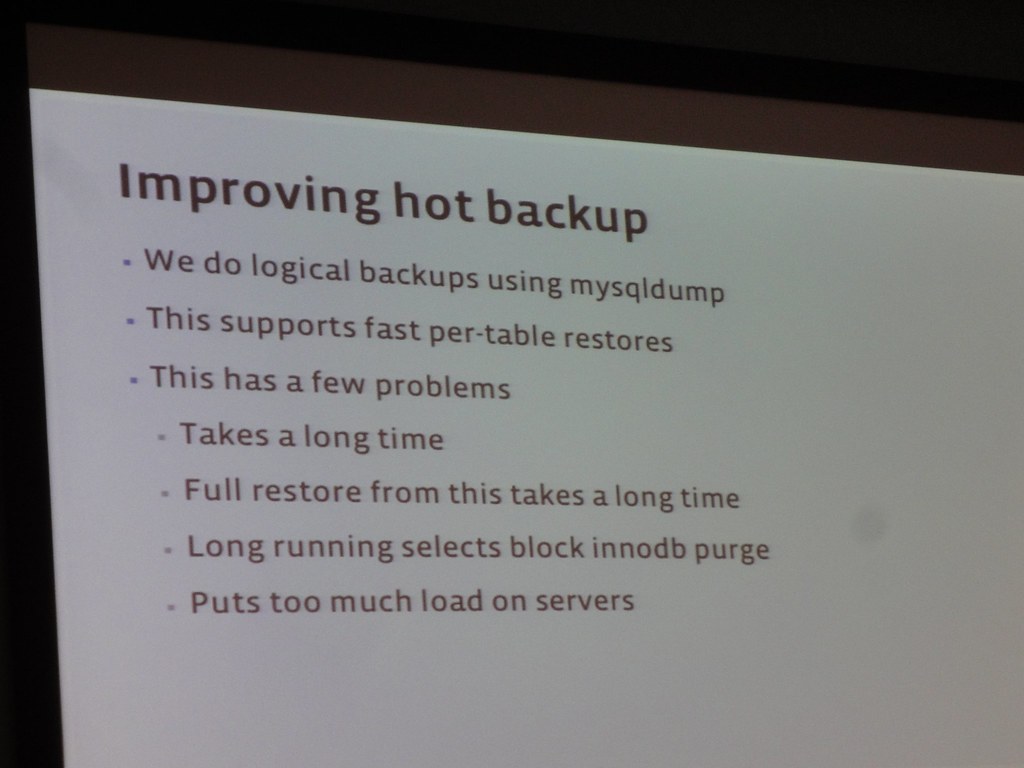

Challenges- incremental hot backup with XtraBackup (not allowed to have read-only views). Too much disk IO to backup each DB every day.

- Single threaded replication

- Servers with many cores and fast SSD. Make sure innodb can handle large io capacity

Several mentions of MariaDB.

Want stored procedures to update social graph (update node, increment counter). But too hard to maintain stored procedures across 1m db instances. Instead, cram multiple queries in one push to mysql connection.

They recreated The Way from Opsware, for doing distributed script execution. Using it to to go file-per-table, and to upgrade to mysql 5.1.47.

have a utility that generates my.cnf file based upon rpm of mysql that is installed

query comment has memcache key, helps them track down source of bad queries

Lots of appreciation for InnoDB, amazing piece of software engineering, has room to grow. Some note that about if you remove the SQL front-end, InnoDB can scale to an insane number of transactions/sec (more than memcache)

Moving slowly towards SSD, using it as caching tier (flashcache). Would love to put everything on SSD, just not affordable. The flashcache is like the buffer pool, but persists across mysql restarts. Don't cache reads for backup (mysqldump).

Run mk-slave-prefetch, not perfect, but helps to reduce the lag. Discussing improvements with percona guys.

in file per table, dropping or truncating a table will stall mysql. unlinks file, while holding lock open. fixed in facebook patch or mysql 5.5.

Public page for MySQL at Facebook