August 30, 2005

An ode to Marble Madness

Marble Madness is, quite possibly, the finest game ever created. Kevin and I have been playing this game with near-religious fervor for the last two months. The shit-talking that goes on between us cannot be explained to others who have not been touched by the greatness that is Marble Madness.

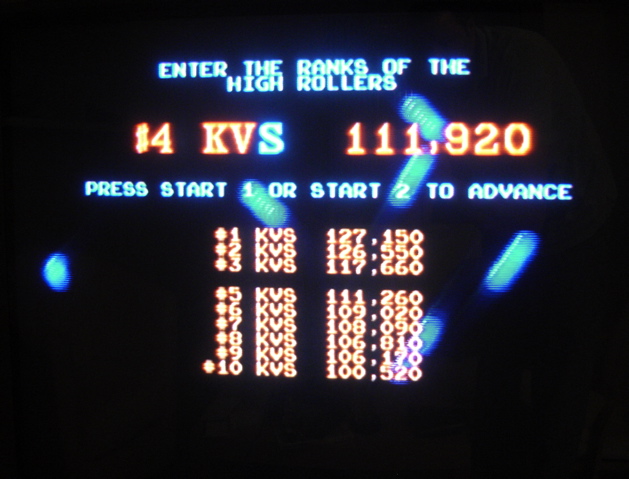

At first, Kevin and I were peers in this game, but in the last month, he has really eclipsed me at it:

Having vanquished all of my high scores long ago, he has now eliminated all of the sub-100,000-point scores.

The result, of course, is that I need to find more time in the day to play, so that my initials can rejoin the high score list.

-Andy.

After 230 days of uptime...

...I was forced to reboot my FreeBSD machine today. For the last several months, Kevin and I (mostly Kevin) have been noticing some terrible latency on our DSL connection, as high as 17,000 ms for the first hop. In the past, I have found that resetting our Westell CPE has fixed the problem. But when it happened again yesterday, the CPE reboot trick didn't work.

This really screwed over Kevin, who uses Microsoft Remote Desktop frequently and had planned to work last night. It was a mild annoyance to me -- with 17,000 ms latency, the web and e-mail were basically unusable. I tried calling tech support last night, but the 24-hour help line was closed.

So, when I woke up this morning, the first thing that I did was call, because I knew that if I didn't fix this thing, I would have an all-out riot on my hands (from Kevin). After fighting with the technical support person for the better part of 40 minutes, we came to an impasse. He said that everything was working fine in the network, and I was saying that my FreeBSD / MacOS X combo was definitely not to blame.

I went to work with things still broken, and resolved to come home "earlyish", and hook my Mac directly to the DSL modem, and call again. That would be a scenario that is much more understandable for tech support, and then I could get some resolution. When I got home, I checked that the latency of the DSL link was still through the roof (it was). So, I hooked my Mac up, and checked again. And I'll be damned if things weren't fine. I surfed around, did a speed test, everything -- the thing was performing like a champ.

My faith in life shaken, I came to realize that my FreeBSD box was causing the problem. So, because I didn't have time to troubleshoot any longer, I rebooted it. And that fixed it. Argh!

The Geek Tax having been paid, I am hoping that this is not a sign of impending hardware failure. Or the apocalypse. Either of those two would seriously put me out, anyway.

-Andy.

Scharffen Berger Chocolate Factory Tour

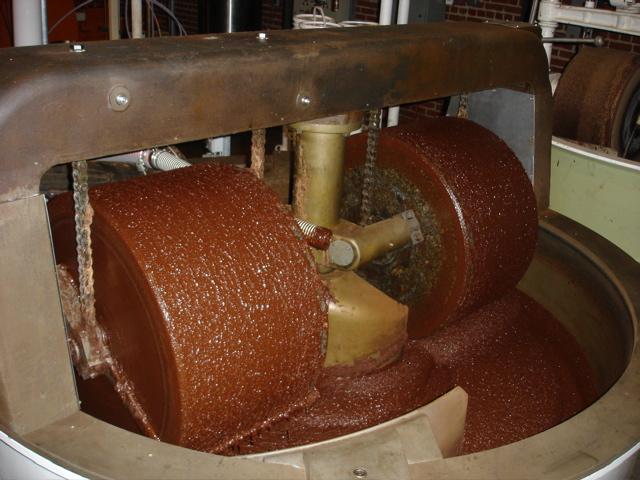

To celebrate Mike and Sheila's return to the Bay Area from South Africa, we went on a tour of the Scharffen Berger Chocolate Factory:

I can't remember the name of the machine pictured, but it uses granite rollers on two axes to grind the cacao (a.k.a. cocoa) bits into a fine goo. It was one of the many machines that we saw on the tour, which was super-awesome, by the way. I went there not planning on buying any chocolate, and of course, I ended up buying three bars.

I dig the fact that they break out the cacao content of their chocolate, and that they make a really dark chocolate (82%). Apparently, I am somewhat in the minority for liking dark chocolate, but hey, I knows what I likes.

All of the pictures that I took are up. This tour is definitely recommended if you are up in the Berkeley, CA area.

-Andy.

August 26, 2005

Still not invulnerable

So, generally speaking, my hands are feeling much better now after typing. The rest and exercise routine, combined with improved ergonomics at work, was successful. Most days, when I leave work, my hands feel fine. The only problems I have are when I use the laptop extensively (like when attending a conference), or when I am typing away from my ergonomic setup (like when attending off-site training).

But things still are not perfect, as I found today. We are getting into "crunch time" at work, and so I have been coding my little brains out for the last two weeks. Over the course of the last few days, in particular, I have really been going to town. Pretty much everybody has been busy with the visiting dignitaries that we have in the office this week, leaving me all alone to code. And while I have been greatly productive, I am finding that there is an upper-limit in terms of how much typing I can do in a day. A limit that I find, of course, during periods of heavy programming.

I'm hoping that once I get a proper ergonomic setup at home, and reduce my laptop usage down to near-zero, that I'll be able to type even more. But, I'll have to see how it goes.

-Andy.

August 21, 2005

Blog redesign phase 1: color

I have commissioned Sara to help me spruce up my blog. She isn't into the "coding" part of the web (HTML, CSS, etc.), so I told her to handle all of the graphics (the part that I am bad at), and I would take care of the coding. To that end, I picked up the 2nd edition of Eric A. Meyer's (CWRU alum) book, "Cascading Style Sheets: The Definitive Guide", from Powell's when I was up in Portland. Today, I used it to help me make mock-ups of the first step in the redesign process: choosing colors. So, I present to you Sara's three proposed color schemes:

I'm not sure yet, but I think I'm leaning towards #2.

-Andy.

August 18, 2005

Should probably be going to BAR Camp this weekend

I just found out about BAR Camp, the open alternative to FOO Camp. Normally, this is the sort of thing that I would be really hot on, especially since it is taking place in my back yard, but I'm just not feeling that motivated. Partly because I don't really have anything to demo and/or talk about. And partly because I just kind of want some "Andy time" this weekend. I have some stuff that I need to do that has been nagging at me for a while, and I'm hoping that I'll be able to carve out some time this weekend to do it.

Hopefully.

-Andy.

August 16, 2005

New pictures posted

I have posted two new galleries: from my Birthday dinner last week, and from a short hike that I took with Stan and Anjali. Some of the hiking pictures came out really well, because Stan was driving the camera. His skills with the camera seem to be progressing a lot faster than mine -- but no matter, he's giving me some good tips that I can use in my photography.

-Andy.

Fall Out Boy's rising star

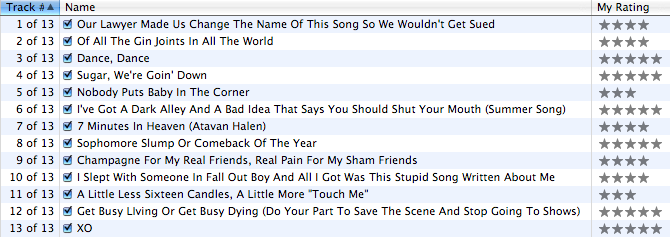

So, Fall Out Boy's new album, "From Under The Cork Tree", came out some time ago. I haven't had a chance to review it yet, so here is my review:

As you can tell from the indisputable iTunes star rating system, the new album is good. Very good. Having six 5-star songs on an album is pretty solid, especially based on how I rate albums. In general, the album doesn't break a lot of new ground from their previous album -- many of the same thematic elements are explored (Love. Loss. And of course, Movies), and the sound is as poppy as ever. But Pete's screaming has been toned down (to positive effect), and some guest vocals have been brought in (which are somewhat superfluous -- Patrick's vocals are one of Fall Out Boy's strongest assets).

But not only is the new album great, but Fall Out Boy's star is on the rise as well. Their first single, "Sugar, We're Goin' Down", is currently #3 on iTunes. They have performed on Conan O'Brien's and Jimmy Kimmel's late night shows. Their video appears to be in frequent MTV rotation. I checked out "MTV's Weekend Dime" this weekend, and Fall Out Boy was not only the topic of VJ prattle, but the "Dance, Dance" video came out at #4 on the top ten list. And finally, they are up for a VMA.

So go and vote for them, and be amazed, as I am, that this little band is gaining such a mass following.

-Andy.

August 15, 2005

Zoey, 13 weeks

Snapped another picture of Chris and Tanya's (well, mostly Tanya's) puppy Zoey on Saturday:

With the number of pictures that I'm taking, you would think that she is my dog. :)

-Andy.

August 13, 2005

Ediri's wedding

Before OSCON, I flew out to balmy Pittsburgh, PA in order to take part in the wedding of one of my friends from CWRU, Ediri Orife, to her fiancé, Mario Montoya. In short, I had an absolutely phenomenal time. I got into town on the evening before the wedding and spent some time in the burgh with Akua. I got to meet some of her new friends, including Nef, Mia, Vic, and Ade. Food was eaten, sleep was not had, Bollywood was watched ("Mujhse Shaadi Karogi", which I have now seen twice), and in general, much fun was had.

And then there was the wedding. It's hard to put down in words why, but this was one of the best weddings that I have been to. Just a tremendous combination of great people, good taste and design, great music, a wonderful setting, and being able to witness two families coming together in peace and harmony.

Ediri and Akua

I captured the event as best as I could with my still camera. And Akua has blogged about it, in terms of a wedding recap and a link to additional pictures. But suffice it to say, if you ever have the chance to go to a Mexican / Nigerian wedding, do not pass it up! :)

And I must extend massive thanks to Ediri for inviting me, and Akua for housing me as well as shuttling me to the airport (including at an ungodly hour).

-Andy.

"March of the Penguins"

Kevin and I went and saw "March of the Penguins" last night. And let me say this -- this movie definitely lives up to the hype. The cinematography is simply stunning -- I am amazed that the filmmakers were able to capture many of the shots that they did. And the Emperor Penguins are quite astounding creatures in and of themselves. It is impressive that any sort of creature is able to inhabit Antarctica (the coldest place on earth), much less thrive, like the Emperor Penguins appear to be doing.

The film itself is a documentary, but it isn't told in an over-bearing, instructional tone. Rather, it shows the plight of the Emperor Penguin in more of a story format. The film puts you in the place of the Penguins (as much as that is possible), to make a solid connection between the viewer and these amazing animals.

In short, I whole-heartedly recommend this movie. If you have a chance to see it, go for it!

-Andy.

August 12, 2005

Birthday dinner

I had my birthday dinner this evening, at a Chicago-style pizza place called Patxi's in downtown Palo Alto:

L-R: Kalpana, Pratima, me, Anjali, Kevin, Chris, and Tanya

The food was good (Chicago is still bringing the one true, however), and the company was excellent.

-Andy.

August 11, 2005

Today's stupid human trick

Today at work, we had a going-away lunch for John (he announced this week that he is leaving EDS, his last day being Friday). The supplier-of-cake on our team (Beth) brought in some micro-cakes today, I guess as a combination of support for my birthday and John's going away.

Anyways, the cake that I selected was sort of like a tall cylinder. Finding the knife-and-fork method to be ineffective for this particular cake, I put my creative thinking hat on and just went for it:

As an astonished Dung and Shreyas looked on, I was glad to be able to provide entertainment for my team. I am clearly not being paid enough for all of the contributions that I am making at the office. Or, I am quite possibly being paid too much. It could go either way, really.

-Andy.

August 10, 2005

Birthday

And so passes another birthday. Thanks to everyone who wished me well today, and big ups to my co-workers for buying me lunch and dessert. And so begins the last year of my twenties. I hope it's as good as all the rest have been.

-Andy.

August 09, 2005

Even I get grossed out sometimes...

I didn't think it was possible (nobody thought it was possible), but coffee is a hospitable environment for mold:

Let's give a big round of applause to my roommate Kevin, for making this all possible. :)

-Andy.

Hacking Windows from Linux for Fun and Profit

I, along with the rest of my team at work, am attending Java WebServices training at a Sun facility all this week. At my workstation, there is an old Sun Ultra 10 and a Dell Precision Workstation 210. One of the computers is loaded with Windows 2000 Server, the other with Solaris 9 (you can guess which is which). I found that I couldn't log in to the Windows server, so today I decided to have some fun. I brought an Ubuntu Linux live CD in with me, and managed to get the Dell running Linux.

Unfortunately, when Linux booted, I found that the network wasn't working. It appeared as if Sun wasn't running a DHCP server for the lab -- which was confirmed when Chirag plugged in his laptop looking for network. Looking at the Sparc box, I found that it was statically configured. So, I ping'd for a free address, and gave the Ubuntu box an IP on the same VLAN. But no dice -- Sun apparently has separated the Solaris and Windows boxen on different VLANs.

My next trick was to run tcpdump. Usually, by analyzing the broadcast traffic, you can sort of figure out what network the machine is on, and what the default gateway is. From there, you can pick an IP and be on your way. Unfortunately, I was able to see broadcast traffic from quite a few networks, so it wasn't plainly obvious which network was "the one" for me. I did some trial and error, but I didn't get lucky.
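Here is a minimal Python sketch of that guessing game. It is an illustration, not a record of what was run in the lab: it assumes the source addresses were copied out of `tcpdump -n` output by hand, and it assumes /24 networks. Both the sample addresses and the prefix length are made up. It ranks candidate networks by how often they show up, then lists host addresses that never appeared in the capture.

```python
import ipaddress
from collections import Counter


def candidate_networks(observed: list[str], prefix: int = 24) -> list[tuple[str, int]]:
    """Group observed source addresses into /prefix networks, busiest first."""
    counts = Counter(
        str(ipaddress.ip_network(f"{addr}/{prefix}", strict=False)) for addr in observed
    )
    return counts.most_common()


def unseen_hosts(network: str, observed: list[str], limit: int = 5) -> list[str]:
    """List host addresses in `network` that never showed up in the capture."""
    seen = {ipaddress.ip_address(addr) for addr in observed}
    result: list[str] = []
    for host in ipaddress.ip_network(network).hosts():
        if host not in seen:
            result.append(str(host))
            if len(result) == limit:
                break
    return result


# Hypothetical source addresses copied out of `tcpdump -n` output.
captured = ["10.1.2.5", "10.1.2.9", "10.1.3.1", "10.1.2.1"]
print(candidate_networks(captured))  # [('10.1.2.0/24', 3), ('10.1.3.0/24', 1)]
print(unseen_hosts("10.1.2.0/24", captured, limit=3))  # ['10.1.2.2', '10.1.2.3', '10.1.2.4']
```

Unseen is not the same as free, so each candidate still deserves a ping before you claim it. And a frequency ranking can't settle a near-tie between several busy networks, which is exactly the situation described above.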

So, the only way I could see was to somehow figure out what IP address the Windows install was configured with, and then reuse that IP on Linux. And since I couldn't log in to Windows, the only way I could think of was to mount the NTFS partition on Linux, and then munge through the registry until I found what I was looking for.

And believe it or not, that is exactly what I did.

I found this MS document which explains all of the registry entries that MS uses for the TCP/IP stack in Windows 2000. Unfortunately, that document isn't 100% complete -- it focuses more on the "tunables" in the stack. However, it references a whitepaper, which had the details of where things like static IP addresses are stored in the registry.

With that information in place, all I needed to know was which file on disk houses the "HKEY_LOCAL_MACHINE\SYSTEM" registry hive. This page told me where that file is backed up, which gave me a clue as to what I should search for on disk. In short order, I was poking around the "\WINNT\system32\config\system" file (that is, "%SystemRoot%\system32\config\system"). The Ubuntu Live CD doesn't appear to contain any sort of fancy hex editor, so I just used xxd, which I piped into less. I was able to search around in that output until I found what I wanted, and got the Ubuntu box onto the network.
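The `xxd | less` search can be approximated in Python. This minimal sketch assumes the hive file is readable from the mounted NTFS partition, and it searches for a string both as plain ASCII and as UTF-16LE: Windows stores string data such as REG_SZ and REG_MULTI_SZ values (the IPAddress value among them) as UTF-16LE, while names inside the hive are often plain ASCII. The script name and mount path in the usage comment are hypothetical. It does not parse the hive format at all; it only finds offsets and prints xxd-style context around them.

```python
import sys


def find_offsets(data: bytes, text: str) -> list[tuple[int, str]]:
    """Return (offset, encoding) for every match of text as ASCII or UTF-16LE."""
    hits = []
    for encoding in ("ascii", "utf-16-le"):
        needle = text.encode(encoding)
        start = data.find(needle)
        while start != -1:
            hits.append((start, encoding))
            start = data.find(needle, start + 1)
    return sorted(hits)


def hexdump(data: bytes, offset: int, length: int = 64) -> str:
    """Render bytes like `xxd`: row offset, 2-byte hex groups, printable ASCII column."""
    lines = []
    base = offset - offset % 16  # align to a 16-byte row, as xxd does
    for row in range(base, min(offset + length, len(data)), 16):
        chunk = data[row:row + 16]
        hex_part = " ".join(chunk[i:i + 2].hex() for i in range(0, len(chunk), 2))
        text_part = "".join(chr(b) if 32 <= b < 127 else "." for b in chunk)
        lines.append(f"{row:08x}: {hex_part:<39}  {text_part}")
    return "\n".join(lines)


if __name__ == "__main__" and len(sys.argv) == 3:
    # Hypothetical usage: python regsearch.py /mnt/win/WINNT/system32/config/system IPAddress
    path, needle = sys.argv[1], sys.argv[2]
    with open(path, "rb") as f:
        hive = f.read()
    for off, enc in find_offsets(hive, needle):
        print(f"--- {enc} match at 0x{off:08x}")
        print(hexdump(hive, off))
```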

In general, the sort of hacking that I did isn't all that novel. In fact, there is a book out now, called "Knoppix Hacks" (O'Reilly), which details similar sorts of hacks that can be done from Linux. But I am glad to have stumbled onto my own such hack, because now I get to play with Ubuntu during training. :)

-Andy.

August 07, 2005

OSCON: Wrap-Up

I had a great time at OSCON this year, and it is going to be a challenge to take all of the technology and inspiration that I have after going to this conference and pour it into work. Some random notes:

- why the lucky stiff put on quite an interesting show. Even though there were many glitches, I was highly entertained.

- Ruby, driven largely by Ruby-on-Rails, got the most buzz of the show. Thanks to great speakers like David Heinemeier Hansson and the aforementioned lucky stiff, I was compelled to go to all of the Ruby talks that I could. I bought the Rails book online, and am currently trying to come up with a way to use Rails at work.

- Dick Hardt's talk was brilliant, and now I'm interested in identity management. I went to a LID talk, and that was a bust, so I think that I need to check out OpenID instead.

- This was a hard conference to blog. A lot of information comes at you pretty quickly, and it was hard for me to synthesize it all into what I wanted to write down. I tend to just copy down the slides, which is a pretty dumb thing to do, since they tend to appear online after the conference. So, I need to get better at listening, understanding, and regurgitating -- all in realtime.

- Planet OSCON was a huge success (IMO). It allowed me to keep track of what all of the other OSCON bloggers were saying, without getting noise from the entire Internet.

-Andy.

OSCON: Notes about the Linux Desktop

Well, OSCON 2005 is over. It's been over for like, two days now, so I guess I should come to grips with it. Seriously, my return to the Bay Area coincided with a Rushabh visit, so I have had "the busy". I have a few things that I want to note about the conference, however.

The keynotes on the last day were pretty good. In fact, most of the keynote speeches (aside from the vendor ones) were pretty good. I have to say that I like the new 15-minute format -- it tends to cut down on the speeches that seem to last forever, and brings the focus down to the good stuff. Case in point, I really liked Asa Dotzler's talk about Linux vs. the desktop. I mostly agree with what he had to say, namely that there are some migration issues, and more importantly some usability issues, for people that are trying to come to Linux from Windows.

But more so than that, I think that there are issues of innovation as well: people need a reason to switch their computer from one OS to another. And while I think that increased security and cost are two super-great reasons to switch to Linux, I don't think that those reasons are enough to get people to switch to Linux in droves. Most people don't think about security, nor do they think about the cost of Windows (it is bundled in the cost of the computer, so they don't really notice it).

Anyway, after Asa's speech, I was kind of bummed, because I think that making a usable interface is hard work -- requiring usability experts, who conduct studies of users, focus on the interface, and refine it until it is great. And I wasn't really sure how free software was going to be able to get this sort of work done. Thankfully, the end of OSCON was capped by a great talk from Miguel de Icaza, who addressed these issues and more.

First, Miguel talked about the work that Novell is doing on Linux, including doing usability testing with actual users! Wow! I was wondering how such a feat would be accomplished in the Open Source world, and I see that funding has come in to make it happen. But what's more important is that the methodology that they used could be duplicated and repeated by the community. All you need is two cameras, the right software, and a whole bunch of time. So, I am heartened to think that Linux and GNOME might actually, eventually "get there" in terms of usability.

Finally, Miguel ended his talk by showing off Xgl. I haven't been able to find a lot of information about this, but basically it is an X server that outputs to OpenGL. This means that you get massive amounts of hardware acceleration, which can be used to do cool UI effects. Miguel demonstrated wobbly windows (the windows wobbled like jelly when dragged), virtual desktops on a 3-D cube (à la Fast User Switching in OS X, but better), an Exposé-like feature (again, from OS X), and finally, real translucent windows. All in all, the demo was amazing. But even more amazing, the Linux desktop now has a solid platform on which really innovative things can start to be created. And hopefully, it is through this innovation that cool new features will be created, which will compel people to start switching to Linux in droves.

Or, if not to Linux, at least to MacOS X... :)

-Andy.

August 05, 2005

OSCON: Advanced Groovy

Groovy is a Java-friendly kind of language. Java strengths: lots of reusable software, components and tools. VM and binary compatibility ease deployment. Allows for innovation at the source code level, as long as it produces JVM-compatible bytecodes. We reuse more than we write -- most code written is glue, bringing components together. Scripting is much better suited to this purpose. The reason for another Agile Language is that they want to be able to reuse all of the Java components and architecture that exist.

Groovy has been around since 2003, JSR-241. Has features of Ruby, Python, and Smalltalk. Uses ANTLR (a parser generator) and ASM (a bytecode library). Features dynamic typing, with optional static typing. Native syntax for lists, maps, arrays, beans, etc. They also have closures -- chunks of code that can be passed around as first-class objects. Regular expressions are built-in (w00t!). There is also native operator overloading, so if you add two lists, the result is a concatenation. Basically, strings, lists, maps, etc. work like you would expect -- Groovy takes care of a lot of the heavy lifting, and makes these things much easier to deal with. Groovy also adds some nice helper methods and such to the JDK.

They have a '?.' (safe navigation) operator that will test an object for null, and cause the entire expression to return null, instead of throwing an NPE. Expando (groovy.util.Expando) is a dynamic object. Create an Expando with some properties, and you can keep chucking stuff into it, in a free-form manner.
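Python has no `?.` operator, but a minimal sketch of the same idea, plus a stand-in for Expando, might look like this. `safe_get` is a hypothetical helper written for illustration, and `types.SimpleNamespace` is a swapped-in Python analogue, not Groovy's Expando itself.

```python
from types import SimpleNamespace
from typing import Any, Optional


def safe_get(obj: Any, *names: str) -> Optional[Any]:
    """Walk obj.name1.name2..., returning None at the first None link,
    roughly what Groovy's `obj?.name1?.name2` does instead of throwing an NPE."""
    for name in names:
        if obj is None:
            return None
        obj = getattr(obj, name)
    return obj


# SimpleNamespace stands in for groovy.util.Expando here: a free-form bag of
# properties that you can keep adding to after the object is created.
person = SimpleNamespace(name="Kevin")
person.city = "Palo Alto"  # added after creation
person.address = None      # deliberately missing

print(safe_get(person, "city"))            # Palo Alto
print(safe_get(person, "address", "zip"))  # None
```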

Groovy supports "currying" for closures, which is a little strange. It seems like it is the |var| thing in Ruby. Basically, you can define a block of code, which has some variables in it that you don't know what will be at the time of definition. Later, you can call the curry() method, and pass those data elements in. Groovy will fill in the variables in order - each time you call curry(), it will fill in the first, second, third, etc. variables.
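Python's `functools.partial` behaves much like the currying described above, binding positional arguments from the left, so chained curry() calls can be sketched in Python like this. `greet` is a made-up example function, and this is a translation of the idea, not Groovy code.

```python
from functools import partial


def greet(greeting: str, punctuation: str, name: str) -> str:
    """A three-argument function standing in for a Groovy closure."""
    return f"{greeting}, {name}{punctuation}"


hello = partial(greet, "Hello")   # like closure.curry("Hello"): binds the 1st parameter
hello_bang = partial(hello, "!")  # like a second curry("!"): binds the 2nd parameter

print(hello_bang("Kevin"))  # Hello, Kevin!
```

Each `partial` call fixes the next leftmost parameter and returns a new callable, leaving the original untouched, which matches the first, second, third filling order from the talk.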

Demos - XML-RPC is one of the cool features of Groovy. In the demo, there is a "groovysh" command, which is an interactive shell into the interpreter, much like Python or Ruby offers. There don't appear to be any semicolons at the end of line in Groovy. The demo shows Groovy interacting with Excel (using a wrapper around WSH), and then a Swing demo. Actions are properties of buttons, which is nice. Groovy looks like it could simplify cranking out quick Swing GUIs.

Some Groovy extras: process execution, threading, testing, SWT, Groovy Pages/Template Engine, Thicky (Groovy-based fat client delivered via GSP), UNIX scripting (Groovy API for pipe, cat, grep, etc.), Eclipse plugin (needs more work).

Performance isn't as good -- 20-90% of Java. Development time is much less than with Java, but debugging kind of sucks. Ready for small, non-mission-critical stuff right now.

-Andy.

August 04, 2005

OSCON: Ubuntu BOF

The Ubuntu BOF was pretty good. We had about nine people there, spanning seasoned pros like Jeff Waugh and newbies like myself. Since I called the BOF to order, I got to ask a lot of stupid questions, and luckily I got them all answered. What follows are some random notes that I scribbled down in a sticky:

Regarding repositories, my questions were around problems that I am encountering when I put in some quirky repositories (Multiverse and beyond). The problem is that the Ubuntu update thingy will look at all repositories, and start giving you packages from the non-stable area. Jeff recommended the following:

- Keep only the official Ubuntu repositories (main and universe) active

- apt-get install pkg=version, to force a specific version

- there is some package pinning stuff that you can do in apt, but it sounds complicated

update-rc.d --> manages startup scripts in Debian / Ubuntu. All it does is manage the symlinks, I think. In Debian, every S-prefixed script in /etc/rc2.d (the default runlevel) is launched, in name order -- there is no funny business.
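A minimal Python model of what those symlinks mean, assuming the classic Debian sysv-rc naming: `S` (start) or `K` (kill), a two-digit sequence number, then the init.d script name (for example, `update-rc.d ssh defaults` creates `S20ssh` links in runlevels 2 through 5). It only interprets a directory listing; it does not call update-rc.d or touch /etc, and the sample listing is made up. K links run first, to stop services, then S links start them, each group in name order.

```python
import re

# Classic Debian sysv-rc link names: S (start) or K (kill), a two-digit sequence
# number, then the name of the script in /etc/init.d (e.g. S20ssh -> ssh).
RC_LINK = re.compile(r"^(?P<action>[SK])(?P<seq>\d{2})(?P<script>.+)$")


def runlevel_plan(entries: list[str]) -> dict[str, list[str]]:
    """Split a runlevel directory listing into kill and start phases.

    Entries are taken in name order; with two-digit sequence numbers that is
    also numeric order. Anything that isn't an rc link (e.g. README) is skipped."""
    plan: dict[str, list[str]] = {"K": [], "S": []}
    for name in sorted(entries):
        match = RC_LINK.match(name)
        if match:
            plan[match["action"]].append(match["script"])
    return plan


listing = ["S20ssh", "K01foo", "S10sysklogd", "README", "S99rc.local"]
print(runlevel_plan(listing))
# {'K': ['foo'], 'S': ['sysklogd', 'ssh', 'rc.local']}
```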

For questions about the mythtv packages on Ubuntu, I was told to use IRC: on the Freenode servers, in #ubuntu, I should talk to mdz (Matt Zimmerman) about the mythtv package. I suspect that there might be a bug in the startup sequence, so that mythtv-backend doesn't start properly on boot.

Debian documentation is installed with Ubuntu and applies to Ubuntu:

- Debian developer policy documents are good to read for questions about system startup, etc.

Ubuntu is building a linux hardware database - Ubuntu Device DB (system tools). Can report to central server. This allows the Ubuntu folks to press hardware vendors to support Ubuntu -- because they can back that need up with actual usage statistics.

Finally, someone told me that I can use "evolution --force-shutdown" whenever I want to restart Evolution cleanly, rather than just killing all of the processes. I'll have to try that the next time Evolution crashes.

OSCON: XMPP in Java

XMPP is an IETF standard now (happened last year). Great for building custom applications because it is simply streaming XML. Speaker knew of a company that built server monitoring infrastructure on XMPP (when a server went down, it sent an XMPP message to the appropriate tech).
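The "simply streaming XML" point is easy to see in a single stanza. This minimal sketch builds the kind of `<message/>` an alerting system might send, using Python's standard ElementTree; the JID and alert text are placeholders. It leaves out everything a real client library such as Smack handles for you: opening the XML stream, authenticating, and the socket itself.

```python
import xml.etree.ElementTree as ET


def alert_stanza(to_jid: str, text: str) -> str:
    """Build an XMPP <message/> stanza carrying a plain-text alert.

    ElementTree escapes special characters (<, &, >) in the body for us."""
    message = ET.Element("message", {"to": to_jid, "type": "chat"})
    body = ET.SubElement(message, "body")
    body.text = text
    return ET.tostring(message, encoding="unicode")


print(alert_stanza("oncall@example.com", "web01 is down"))
# <message to="oncall@example.com" type="chat"><body>web01 is down</body></message>
```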

Jive Messenger is the premier OSS Java Jabber server. Good performance, web-based admin console, just released version 2.2, and integrated with Asterisk -- so they have VoIP integration (closing in on unified messaging).

XMPP client libraries: Smack and JSO. For simplicity and ease of development, Smack is the best. Other libraries have extra bells & whistles.

OSCON: Inside Ponie, the Bridge from Perl 5 to Perl 6

A port of the Perl 5 runtime to the Parrot interpreter (used by Perl 6). Allows you to run unmodified Perl 5 code on Perl 6. The focus is on Perl 5 XS, the interface that Perl 5 modules use to glue in C code. Hence, the idea is to be able to run the CPAN modules that use XS, unmodified, on Perl 6.

Perl 5 VM:

- Stack machine

- 300 instructions

- OP tree, not bytecode

- Never serializes these instructions out to disk (attempts to do this have produced results that were bigger and slower)
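To make "stack machine" and "OP tree" concrete, here is a toy Python evaluator. It is a sketch, not Perl's implementation: the op names mirror real Perl 5 ops (`const`, `add`, `multiply`), but the class and the recursive walk are illustrative assumptions. Children push their results onto an operand stack and the parent pops them; the real perl interpreter instead follows a precomputed `op_next` chain in execution order, but the stack discipline is the same idea.

```python
from dataclasses import dataclass, field


@dataclass
class Op:
    name: str                   # e.g. "const", "add", "multiply"
    value: int = 0              # only used by "const"
    children: list["Op"] = field(default_factory=list)


def run(op: Op, stack: list[int]) -> None:
    """Evaluate an op tree on an operand stack: children push, parents pop."""
    for child in op.children:
        run(child, stack)
    if op.name == "const":
        stack.append(op.value)
    elif op.name == "add":
        right, left = stack.pop(), stack.pop()
        stack.append(left + right)
    elif op.name == "multiply":
        right, left = stack.pop(), stack.pop()
        stack.append(left * right)
    else:
        raise ValueError(f"unknown op {op.name}")


# (2 + 3) * 4 as an op tree
tree = Op("multiply", children=[
    Op("add", children=[Op("const", 2), Op("const", 3)]),
    Op("const", 4),
])
stack: list[int] = []
run(tree, stack)
print(stack)  # [20]
```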

Goal is to write and run Perl 6 code, run existing Perl 5 code, and run code that links to external libraries (XS). Call back and forth transparently. Pass data back and forth. Then we can convert Perl 5 code function by function to Perl 6 (nice).

After going through some problems with inline, the conclusion is that you need to have the code executing within one VM, with a single runloop. Can't compile Perl 6 code to Perl 5 -- VM not modern enough. Reverse is also hard -- Ponie provides another way. The Perl 5 language is defined by the C source (since there is only one implementation, and some quirks that aren't documented). There is a lot of complexity in how Perl 5 has been implemented, that must be bridged to get it working in Perl 6. There is a need to unify data storage, so Perl 5 data must be wrapped, so that it can be stored in Parrot.

Ponie is a big refactoring exercise. Work is linear -- each task depends on a previous task. Finding many bugs in the Perl 5 core. Fixing these, and making some improvements in Perl 5 core. What is still a little unclear from this talk is how Parrot will actually work when it is finished. It looks like it will be integrated with Perl 6, and allow seamless execution of Perl 5 code, but I'm not sure. There might be an option to invoke it, or something.

-Andy.

OSCON: Don't Drop the SOAP! Why Web Services Need Complexity

Talk is in praise of SOAP, but not meant to talk trash about REST. Simpler protocols are great, but SOAP has its place as well. REST stands for "REpresentational State Transfer", and everything is based on URIs. Relies on HTTP verbs (GET, POST, HEAD, PUT, and DELETE). Encodes parameters and details in the URI. Where REST is simple and straightforward, SOAP is complex and opaque. There is a basic spec for SOAP, as well as WSDL, WS-I, etc. XML-RPC is a third alternative that sits in the middle -- encodes parameters and details in XML like SOAP, but uses HTTP POST like REST.
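The difference in where the parameters go can be sketched with Python's standard library. The endpoint, resource path, and `catalog.lookup` method name are hypothetical; `xmlrpc.client.dumps` is the standard-library call that produces an XML-RPC request body.

```python
import xmlrpc.client
from urllib.parse import urlencode


def rest_url(base: str, resource: str, **params: str) -> str:
    """REST style: the resource is named by the URI path; extra details ride in the
    query string, and the HTTP verb (GET here) says what to do with it."""
    query = urlencode(params)
    return f"{base}/{resource}?{query}" if query else f"{base}/{resource}"


def xmlrpc_body(method: str, *args: object) -> str:
    """XML-RPC style: the method name and parameters are encoded as XML, and the
    whole document is sent as the body of an HTTP POST to a single endpoint."""
    return xmlrpc.client.dumps(args, methodname=method)


print(rest_url("http://api.example.com", "items/1234", format="xml"))
# http://api.example.com/items/1234?format=xml
print(xmlrpc_body("catalog.lookup", "1234"))
```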

SOAP criticisms:

- Too complex

- REST more scalable than SOAP

- SOAP based on new and untested technologies (WS-I, for example)

- REST more accurately models system by keeping strong relationship between URI and resource. Don't hide details in XML config files ("XML sit-ups", to quote DHH).

The pitch is that REST and SOAP are not in direct competition. It is more like C vs. Java -- each tool has appropriate applications. Complexity is a gradient, not a binary switch. XML-RPC has SOAP beat in terms of number of implementations/platforms (80 implementations across 30 platforms). XML-RPC has some limitations that aren't being fixed in the spec?

More about complexity. Don't confuse complexity with detail -- Amazon can provide a lot of detail based solely on the ASIN in a REST call. It seems like one of the limitations of REST might be if you have variable-length arguments. Creating a REST URI that can accept this sort of data could be complicated and/or ugly. Is that the point of this talk? No way to define a contract with REST, as opposed to SOAP.
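One common workaround for variable-length arguments is simply to repeat the query key. This minimal sketch assumes the server reads repeated keys as a list; the URL and the `id` parameter are hypothetical.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def multi_value_url(base: str, key: str, values: list[str]) -> str:
    """Encode a variable-length argument list by repeating the same query key."""
    return f"{base}?{urlencode({key: values}, doseq=True)}"


def read_values(url: str, key: str) -> list[str]:
    """Recover the list on the server side; parse_qs collects repeated keys."""
    return parse_qs(urlsplit(url).query).get(key, [])


url = multi_value_url("http://api.example.com/items", "id", ["123", "456", "789"])
print(url)  # http://api.example.com/items?id=123&id=456&id=789
print(read_values(url, "id"))  # ['123', '456', '789']
```

It works, but nothing in the URI itself declares that `id` may repeat, which is the missing "contract" the talk contrasts with SOAP and WSDL.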

On the whole, I still don't understand what the benefit of SOAP is. It just seems like a massive PITA, and REST seems so much easier. Hmm...

-Andy.

OSCON: Customizing MacOS X with Open Source Software

DarwinPorts:

- Conceptually similar to FreeBSD ports

- Customization via "variants" system (unique to DarwinPorts)

- It's possible to patch and/or modify Darwin code, compile it, and then layer MacOS X on top.

- Software Update will over-write such a system, however

- There are potential problems:

  - Default compiler version (MacOS X ships with several GCCs)

    - 2.95.2

    - 3.1

    - 3.3 (compiler for Tiger OS)

    - 3.5

    - 4.0 (default in Tiger)

    - The gcc_select command will switch the default around

  - Many tools needed for the build don't come with OS X by default

  - Environment variables

    - SRCROOT - where the source code lives

    - OBJROOT - intermediate .o files

    - SYMROOT - debug versions of binaries

    - DSTROOT - final finished binaries

    - MACOSX_DEPLOYMENT_TARGET - allows flagging APIs as deprecated

    - RC_RELEASE - Tiger

    - UNAME_RELEASE - 8.0

    - UNAME - Darwin

    - RC_ARCHS - ppc i386 (architectures that binaries should be compiled for)

    - RC_ProjectName - Name of project being built

    - Even more variables...

  - Aliases

    - Based on $RC_ProjectName

    - Examples are DSPasswordServerPlugin, cctools (compiler), libc (& libc debug)
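DarwinBuild exists so you don't have to set these up by hand, but seeing them together helps. This minimal Python sketch assembles the variables from the list above for one project. The directory layout under `build_root` is a made-up assumption, not DarwinBuild's actual layout, and the values are the Tiger-era ones from the talk.

```python
import os


def darwin_build_env(project: str, build_root: str) -> dict[str, str]:
    """Assemble the build variables from the talk for one Darwin project.

    The directory names under build_root are hypothetical placeholders."""
    env = dict(os.environ)
    env.update({
        "SRCROOT": os.path.join(build_root, "Sources", project),   # where the source lives
        "OBJROOT": os.path.join(build_root, "Objects", project),   # intermediate .o files
        "SYMROOT": os.path.join(build_root, "Symbols", project),   # debug versions of binaries
        "DSTROOT": os.path.join(build_root, "Roots", project),     # final finished binaries
        "MACOSX_DEPLOYMENT_TARGET": "10.4",
        "RC_RELEASE": "Tiger",
        "UNAME_RELEASE": "8.0",
        "UNAME": "Darwin",
        "RC_ARCHS": "ppc i386",        # architectures to compile for
        "RC_ProjectName": project,
    })
    return env


env = darwin_build_env("bash", "/tmp/darwin")
for key in ("SRCROOT", "DSTROOT", "RC_ARCHS", "RC_ProjectName"):
    print(f"{key}={env[key]}")
# The dict could then be handed to subprocess.run(..., env=env) for whatever
# build command the project uses; that step is left out of this sketch.
```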

Need to download private headers. Apple really discourages the use of static libraries, to make the system more flexible, and easier to patch. Sometimes static libraries are needed by the system itself, so it is possible to build them. But Apple doesn't ship any static libraries by default.

Some internal tools are also needed: kextsymboltool, for example.

The good news is that DarwinBuild can track all of these potential problems, and can download all of the necessary bits to make compiling Darwin easy. It automatically sets up a chroot() environment, for example, so that the build can be done without mucking with the currently running system. You give DarwinBuild a release number (8C42, for example, which is 10.4.2), and it will fetch a plist file, which describes details of the build.

DarwinBuild can be used to build individual components, like bash for example. Just type 'darwinbuild bash', and it will fetch the latest sources, and do a build.

Next, we got a demo of using DarwinBuild to build a version of talk that has Bonjour support. Fairly nifty stuff. Using DarwinBuild, you can target specific releases of MacOS X -- building talk for a friend who is still running Panther, for example.

OSCON: Hosting another BOF

I only had one person tell me that an Ubuntu BOF would be a good idea, but as it turns out, that is enough for me. I have signed up to do an Ubuntu BOF at 8:30 tonight (check the board for the room). Hope to see you there!

-Andy.

OSCON Speaker and Conference evaluations

The links to the online evaluation forms are hard to find. So, here they are: Speaker and Session Evaluation form, and the Conference Evaluation form.

-Andy.

August 03, 2005

OSCON: Supercharging Firefox with Extensions

Extensions are code that runs in the browser as if it were part of Firefox. This talk is geared around "the trunk" (the upcoming Firefox 1.5). Popular examples:

- AdBlock

- Greasemonkey

- BugMeNot

- FoxyTunes

- Many toolbars: Google, Yahoo, A9, Netcraft

- Install Manifest -- structure of extension, and where it should go in the Firefox system. Aids in deinstallation. No protection -- extensions can be written that don't cleanly uninstall.

- "Chrome" (User Interface components)

- Components (non-UI) - integrate with services, hook to other parts of system, etc.

- Defaults (Preferences)

- "Zippy" files: zip file with a .xpi extension.

- Can package traditional "heavyweight" plugins (flash, pdf reader, etc.) as Zippy files

- Don't need GUIDs anymore for the extension's directory name.

- install.rdf file needs to be in root of .xpi.

- Long topic; good resources for XUL development at developer.mozilla.org.

- Supplies UI controls, drag-n-drop, menus, toolbars, etc.

- Bound to actions through JavaScript

- You can do overlays on top of Mozilla-supplied XUL, to plug in your own UI elements for the extension. A XUL overlay can add, remove, or change elements in existing XUL.

- XUL is a document, which can be manipulated just like you would a DOM in DHTML.

- Site: xulplanet.com

- Chrome Manifest -- where to put overlay and where to find it.

- Install Manifest

- RDF/XML file

- Identifies extension

- Compatibility information (allows the extension to install in both Thunderbird and Firefox, for example)

- Take an existing file and modify it -- too hard to do from scratch. Or grab an RDF generator.

- Update information -- for update checking

- Save as install.rdf

- Can point Firefox at a directory, so you don't need to repackage.

- Still need to restart browser to have it pick up new extension. But it seems like the browser will pick up code changes dynamically?

- If you turn off XUL cache, may not need to restart Firefox.

- Dialogs are in separate .xul files

- Functionality in .js files

- Use DOM methods to manipulate UI elements within XUL

- Use .css files to add images, set colors, change style, etc.

- Firefox supports XPCOM components in extensions

- This allows implementing code in JavaScript, C++ (Gecko SDK), etc. -- interacts with more of "the guts" of Firefox.

- Automatically registered by putting these files in components/ directory.
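To tie the pieces above together (install manifest at the root, chrome manifest, an overlay, and zip packaging), here is a minimal Python sketch that writes a bare-bones .xpi. The extension ID, name, versions, and overlay contents are made-up placeholders; the Firefox application GUID and the `chrome.manifest` lines reflect my understanding of the Firefox 1.5 formats, so double-check them against developer.mozilla.org before relying on this.

```python
import zipfile

# Placeholder values for a hypothetical extension called "hello".
EXT_ID = "hello@example.org"  # email-style ID, so no GUID is needed
FIREFOX_ID = "{ec8030f7-c20a-464f-9b0e-13a3a9e97384}"  # Firefox's application ID

INSTALL_RDF = f"""<?xml version="1.0"?>
<RDF xmlns="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
     xmlns:em="http://www.mozilla.org/2004/em-rdf#">
  <Description about="urn:mozilla:install-manifest">
    <em:id>{EXT_ID}</em:id>
    <em:name>Hello</em:name>
    <em:version>0.1</em:version>
    <em:targetApplication>
      <Description>
        <em:id>{FIREFOX_ID}</em:id>
        <em:minVersion>1.5</em:minVersion>
        <em:maxVersion>1.5.0.*</em:maxVersion>
      </Description>
    </em:targetApplication>
  </Description>
</RDF>
"""

# Register the chrome package, and overlay our XUL onto the main browser window.
CHROME_MANIFEST = """content hello chrome/content/
overlay chrome://browser/content/browser.xul chrome://hello/content/overlay.xul
"""

# The overlay adds one panel to the browser's existing status bar.
OVERLAY_XUL = """<?xml version="1.0"?>
<overlay id="hello-overlay"
         xmlns="http://www.mozilla.org/keymaster/gatekeeper/there.is.only.xul">
  <statusbar id="status-bar">
    <statusbarpanel id="hello-panel" label="Hello"/>
  </statusbar>
</overlay>
"""


def build_xpi(target) -> list[str]:
    """Write the extension as a zip (.xpi) to a path or binary file object."""
    files = {
        "install.rdf": INSTALL_RDF,  # must sit at the root of the .xpi
        "chrome.manifest": CHROME_MANIFEST,
        "chrome/content/overlay.xul": OVERLAY_XUL,
    }
    with zipfile.ZipFile(target, "w", zipfile.ZIP_DEFLATED) as xpi:
        for name, text in files.items():
            xpi.writestr(name, text)
    return sorted(files)
```

Calling `build_xpi("hello.xpi")` writes the package; during development you can skip this step and point Firefox at the unpacked directory instead.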

OSCON: Introduction to XSLT

This was a bare-knuckle, how-does-XSLT-work kind of talk. I think I was looking for a gentler introduction, something that would be more motivating: working through examples, showing a problem, and how XSLT elegantly solves said problem. Basically, I think that this talk needs some presentation Aikido. The presenter, Evan Lenz, really seemed to know XSLT backwards and forwards. And he prepared a nice quiz, which was a great way to work through some of the complexities of XPath. In addition, it fostered some group collaboration, in that we broke down into teams of two to go through the questions. All he needed in his presentation was more of an emphasis on case studies, real-world examples, and stories. He was basically trying to teach XSLT in the course of this tutorial, which isn't typically how I go about learning a new language.

Some notes that I harvested from the presentation:

- Michael Kay's "XSLT Programmer's Reference", well-regarded text on XSLT.

- Eventually, the slides will be available here: http://xmlportfolio.com/oscon2005/.

- If XPath is about trees, XSLT is about lists. Populate arbitrary nodes from the source tree into lists. Iterate over those lists to produce result tree.

- XSLT

- XPath - language for addressing parts of an XML document.

- XPath is part of XSLT is part of XSL

- What does my XPath expression select?

- And what substitutions are being made as a result?
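The "XPath selects lists, XSLT iterates them into a result tree" idea can be approximated in Python with the standard library's ElementTree, which understands only a limited subset of XPath. This is not XSLT, just a sketch of the same two steps; the `<talks>` document is invented sample data.

```python
import xml.etree.ElementTree as ET

# Invented sample input.
SOURCE = """<talks>
  <talk track="xml"><title>Introduction to XSLT</title></talk>
  <talk track="ruby"><title>Ruby on Rails</title></talk>
  <talk track="xml"><title>XPath Deep Dive</title></talk>
</talks>"""


def titles_as_list(xml_text: str, track: str) -> ET.Element:
    source = ET.fromstring(xml_text)
    # Step 1 (XPath's job): address parts of the tree, yielding a node list.
    selected = source.findall(f"./talk[@track='{track}']/title")
    # Step 2 (XSLT's job): iterate over that list to build the result tree.
    result = ET.Element("ul")
    for title in selected:
        ET.SubElement(result, "li").text = title.text
    return result


print(ET.tostring(titles_as_list(SOURCE, "xml"), encoding="unicode"))
```

This prints `<ul><li>Introduction to XSLT</li><li>XPath Deep Dive</li></ul>`. The two questions above map directly onto the two steps: what `findall` selects, and what the loop substitutes into the output.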

OSCON: Keep It Simple With PythonCard

PythonCard is good for building simple GUI applications.

- NOT HyperCard (that was the original intent)

- More like VB (today)

Creating an application is quite simple. There is a GUI environment, which you use to create a new application. Cross-platform, runs on Windows, Mac, and Linux. Dependent on wxPython underneath, which means that Mac is the least mature of all platforms.

The GUI design environment (the resource editor) is quite VB-like. You can drag and drop form elements, text labels, etc. PythonCard has runtime tools, including debugging, logging, and watching messages, which make it easy to figure out what is going on in the application. There is also a built-in code editor, and a Python shell window that is always available. The shell has code-completion, which seems nice.

Stand-alone installers can be built via the standard Python means (py2exe, and some other packager for the Mac).

-Andy.

OSCON: Swik

Swik is "the free and open database of Open Source projects that anyone can edit". Trying to make better documentation for OSS projects. Also trying to make it easier to find OSS projects (solve the "which is the right project for me?" question).

For documentation, they looked into the Wiki concept, and because Wikis are great, that is the route that Swik chose. It sounds like a simplistic Wiki -- they aren't using Textile or Markdown or anything.

For organization, they are pursuing tagging (à la del.icio.us). Don't need to worry about hierarchy or anything -- can just assign the tags that fit, and let the system sort it out. In the future, tag analysis will afford another level of organization and capability for finding OSS projects.
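To make the flat tagging model concrete, here is a minimal Python sketch of a tag index: each project gets whatever tags fit, and lookups intersect tag sets instead of walking a hierarchy. It is purely illustrative, not Swik's code, and the tags are ones I made up.

```python
from collections import defaultdict


class TagIndex:
    """Flat tag -> projects index; no categories or hierarchy."""

    def __init__(self) -> None:
        self._projects_by_tag = defaultdict(set)

    def tag(self, project: str, *tags: str) -> None:
        for t in tags:
            self._projects_by_tag[t.lower()].add(project)

    def find(self, *tags: str) -> set:
        """Return the projects that carry every one of the given tags."""
        if not tags:
            return set()
        # .get avoids creating empty entries for unknown tags.
        matches = [self._projects_by_tag.get(t.lower(), set()) for t in tags]
        return set.intersection(*matches)


index = TagIndex()
index.tag("Unison", "sync", "file", "cross-platform")
index.tag("Joe", "editor", "console")
print(index.find("sync", "file"))
```

Adding a new kind of project never requires reorganizing anything; it just introduces new tags.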

Going back to documentation, didn't want to repeat documentation work that has already been done. RSS is the most popular way to get data about OSS projects. Aggregating RSS feeds for OSS projects? Also going after Web Services, for example using the freshmeat WS API to do integration with freshmeat.
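Aggregating per-project RSS feeds comes down to parsing each feed's items and merging them by date. Here is a minimal standard-library sketch, assuming plain RSS 2.0 where every item has an RFC 822 `pubDate`; the feeds are inline strings with invented items rather than real downloads, and the function name is my own.

```python
import xml.etree.ElementTree as ET
from email.utils import parsedate_to_datetime


def merge_feeds(*feeds: str, limit: int = 10) -> list:
    """Merge RSS 2.0 documents into one newest-first list of (project, title)."""
    entries = []
    for xml_text in feeds:
        channel = ET.fromstring(xml_text).find("channel")
        project = channel.findtext("title", default="(untitled)")
        for item in channel.findall("item"):
            # Assumes every item carries a pubDate with a numeric offset.
            published = parsedate_to_datetime(item.findtext("pubDate"))
            entries.append((published, project, item.findtext("title", default="")))
    entries.sort(key=lambda entry: entry[0], reverse=True)  # newest first
    return [(project, title) for _, project, title in entries[:limit]]


FEED_A = """<rss version="2.0"><channel><title>Unison</title>
<item><title>Sample item A1</title><pubDate>Mon, 01 Aug 2005 09:00:00 +0000</pubDate></item>
</channel></rss>"""
FEED_B = """<rss version="2.0"><channel><title>Joe</title>
<item><title>Sample item B1</title><pubDate>Tue, 02 Aug 2005 09:00:00 +0000</pubDate></item>
<item><title>Sample item B2</title><pubDate>Sun, 31 Jul 2005 09:00:00 +0000</pubDate></item>
</channel></rss>"""

print(merge_feeds(FEED_A, FEED_B))
```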

From the demo, Swik looks very Google-like in its UI. Typing in a search term brings back a page that is a composite of several web services. Every project found builds a new record in Swik. This can be edited (Wiki-style), to add additional information, metadata (tags), etc.

I played around with Swik a bit, and it looks pretty cool. I added two projects, Unison and Joe, and it is pretty painless to get new data into the system. For the default homepage, it picks the Google "I'm feeling lucky" link, which I think is often wrong. That is because freshmeat doesn't publish the homepage for a project via their Web Service (and it might violate their TOS to spider it).

They are working on Open Sourcing Swik, and are soliciting comments about how to do it. Go to the Swik project on Swik to comment.

-Andy.

Any interest in an Ubuntu BOF?

A question for the OSCON blogosphere: is anybody interested in an Ubuntu BOF? Things that I think we could talk about:

- Upgrades:

- How do I rollback patches that come out for Ubuntu?

- When should I reboot after applying a patch?

- When it comes time to upgrade to Breezy, how will I do it, and how will I ensure that my system doesn't blow up?

- Startup scripts: what is the chkconfig equivalent on Debian/Ubuntu?

- Documentation: aside from Ubuntu Guide, what other definitive documentation repositories exist? Is there anything like the FreeBSD Handbook?

- Where is the best place to go with Ubuntu questions?

-Andy.

August 02, 2005

OSCON: Yahoo! Buzz Game

Now someone from Yahoo! is talking about the Buzz Game, a joint project between Yahoo! Research and O'Reilly Research. It looks like a way to hedge bets on what will be "hot" in the coming months, with hotness determined by search volume on Yahoo! Search. Looks kind of interesting. It has been out since March, but this is the first that I have heard of it.

According to their stats, Windows XP and Internet Explorer are still gaining buzz over Linux and Firefox, respectively. Whateva!

-Andy.

OSCON: Larry Wall's State of the Onion

Some quotes from Larry:

- "As a child of the cold war, I know that seeing a mushroom cloud is a good thing -- it means that you haven't been vaporized yet."

- "You know, when you think about it, most Open Source software is written using other people's money."

- "Diversity in California is not only encouraged, it is required".

OSCON laxitude

Okay, this conference is now officially super laid-back. You are supposed to wear your OSCON badge on a lanyard around your neck. And helpful attendants are supposed to check it every time you want to enter a pay-for part of the conference.

This is all well and good -- it is how most conferences work.

The problem is, the checks seem totally lax. I took my badge off when I went to lunch, and I just now realized that I forgot to put it back on. I have entered the room for the XSLT talk twice now, and only got acknowledged by the security person the second time.

Wow.

-Andy.

Planet OSCON is live

At last night's blogging BOF (which I hope to write more about later), I asked how people were finding those who were blogging about OSCON. While I was aware of the part of the OSCON Wiki where people were putting information on their blogs, I didn't want to have to groom through all of those URLs in order to get the appropriate RSS feeds into NetNewsWire.

When I posed this question to the group, Casey West suggested setting up the Planet Planet software. While that is in and of itself a good idea, out of the box, the planet software requires an admin to add RSS feeds to the configuration. So, Casey followed that idea up with the notion of gluing a simple CGI to planet, which people could use to add their own blogs to the planet configuration (Wiki-style).

Whelp, we all agreed that it was a good idea, and now Casey has turned around and created it. Awesome!

So, if you want to play along with OSCON at home, this seems like the most definitive way to do so. I whole-heartedly suggest that you check it out.
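Casey's glue idea boils down to appending a feed to Planet's INI-style config, where (as I understand the Planet config format) each feed URL gets its own section with a `name` option. Here is a minimal Python sketch of that one step; the function name and the URL check are my own assumptions, and a real CGI front end (form handling, file locking, spam control) is left out.

```python
import configparser
import io
from urllib.parse import urlparse


def add_feed(config_text: str, feed_url: str, name: str) -> str:
    """Return the config text with a section for feed_url added or updated."""
    parsed = urlparse(feed_url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"not an http(s) feed URL: {feed_url!r}")
    # interpolation=None so '%' characters in URLs are taken literally.
    config = configparser.ConfigParser(interpolation=None)
    config.read_string(config_text)
    if not config.has_section(feed_url):
        config.add_section(feed_url)  # one section per feed URL
    config.set(feed_url, "name", name)
    out = io.StringIO()
    config.write(out)
    return out.getvalue()


BASE = """[Planet]
name = Planet OSCON
"""
print(add_feed(BASE, "http://example.org/blog/index.rss", "Andy"))
```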

-Andy.

August 01, 2005

OSCON: Ruby on Rails: Enjoying the Ride of Programming

Rails works by convention. It generates a directory structure and some stub files. This doesn't limit flexibility, because you can override everything. What matters is having a convention (where you put the braces, etc.), not what the convention is.

Code and setup configuration are done via the generate command, which is the pathway into the generation engine in Rails. You can create your own generators, to generate Rails apps that have your own custom elements (like authentication, etc). Use "./script/generate" to make a controller. Rails relies on the convention that, by default, the controllers you create are exposed in URLs based on their name (instead of having some nasty XML config file that makes this correlation). Based on how you refer to a URL, some actions are assumed (for example, with no trailing slash, it tries the index action).

Conventions:

- CamelCase mapped to underscored names

- URLs should be all lower-case, and use underscores instead of CamelCase

- Table names are pluralized form of model (post model -> posts table)

- Primary key is assumed to be id, and to auto-increment.
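The naming conventions above can be approximated in a few lines of Python. This is only a sketch: the `underscore` regexes follow the usual CamelCase-splitting approach, but the pluralizer is deliberately naive, whereas Rails' Inflector knows many irregular forms. The `foreign_key` helper assumes Rails' `post_id`-style convention (singular model name plus `_id`).

```python
import re


def underscore(camel: str) -> str:
    """'BlogPost' -> 'blog_post', 'HTMLParser' -> 'html_parser'."""
    s = re.sub(r"([A-Z]+)([A-Z][a-z])", r"\1_\2", camel)
    s = re.sub(r"([a-z\d])([A-Z])", r"\1_\2", s)
    return s.lower()


def pluralize(word: str) -> str:
    """Naive English plural; real inflectors handle irregular words too."""
    if re.search(r"[^aeiou]y$", word):
        return word[:-1] + "ies"
    if re.search(r"(s|x|z|ch|sh)$", word):
        return word + "es"
    return word + "s"


def table_name(model: str) -> str:
    """Model class -> table name: 'Post' -> 'posts'."""
    return pluralize(underscore(model))


def foreign_key(model: str) -> str:
    """Model class -> foreign-key column: 'Post' -> 'post_id'."""
    return underscore(model) + "_id"


for model in ("Post", "BlogPost", "Category"):
    print(model, table_name(model), foreign_key(model))
```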

For views, in order to process an action, Rails attempts to look in the views directory, to see if it can find a view that matches the action. If it finds a match, then it will apply the view to the output.

Rails does have a config file, in YAML format, which gets converted into a ruby hash automatically.

Alongside generate, there is a companion command called destroy, which can remove things that you create by mistake, etc.

Rails has automatic (default) model actions for different database types. If it finds a date/time type in the DB, it will automatically generate HTML to allow inputs for that type. Ordering on the page corresponds to the order of columns in the DB table. Scaffolding is just for getting started, because look and feel will always need to be customized. The model queries the database, handling all of the necessary SQL by default. You can still plug in your own SQL, when it is needed. Models are created before making the database table.

You need to tell model objects "what you mean" about relationships (many-to-one, many-to-many, etc). Use foreign keys in the DB to do this. The convention is to name a foreign key after the singular model name plus _id (for example, post_id pointing at the posts table), so that Rails can find the other table and correspond it to the model for that table. This also takes some code in the model. The goal is to get all logic into the model, instead of into the DB; the DB is just for storage.

Rails is targeted at greenfield development -- new applications being built from scratch. For example, Rails doesn't like composite primary keys. It can bend to work with legacy DBs, but if they are super-complicated, it won't work.

Can use console script, to interact with domain model in a CLI-type fashion. Can also be used to debug & administer live systems.

Layout: a way of reversing responsibility between layout and template. The layout includes other files, so you can change formatting in one place. Inversion-of-control-like? There is a convention around file names: if a file in the layouts directory shares the same name as a view directory (i.e., the controller), it will automatically be used.

Rails has "filters", applied in a chain. Seems conceptually similar to workflow, i.e. filters in Remedy ARS. Apply filters in the controller class. You have the granularity to specify which actions need to run which filters.
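Here is a rough Python sketch of that filter-chain idea: each filter can be limited to, or excluded from, particular actions, and a filter returning False stops the chain before the action runs (Rails 1.x before_filters halted the same way on a false return value). The class, function, and parameter names are hypothetical, not Rails' API.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

Request = dict  # stand-in for a real request object


@dataclass
class Filter:
    fn: Callable[[Request], bool]  # returns False to halt the chain
    only: Optional[set] = None  # if given, run only for these actions
    skip: set = field(default_factory=set)  # never run for these actions

    def applies_to(self, action: str) -> bool:
        if self.only is not None and action not in self.only:
            return False
        return action not in self.skip


def run_action(filters, action: str, request: Request,
               handler: Callable[[Request], str]) -> str:
    """Run the applicable filters in order, then the action handler."""
    for f in filters:
        if f.applies_to(action) and f.fn(request) is False:
            return "halted"
    return handler(request)


def log_request(request: Request) -> bool:
    request.setdefault("log", []).append("seen")
    return True


def require_login(request: Request) -> bool:
    return request.get("user") is not None


chain = [Filter(log_request), Filter(require_login, skip={"index"})]
print(run_action(chain, "index", {}, lambda r: "listing"))  # public action
print(run_action(chain, "edit", {}, lambda r: "edit form"))  # needs a user
```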

Rails has built-in logging. Even better, you can access the logger, to do printf-style debugging. Call logger.info to do this.

By default, Rails stores session info in files in /tmp. You can configure it to store session info in the DB or in memcache, which will work better for load-balanced environments.

You can use inheritance. For example, if you rename layout file to be at application level, then all views will apply it, since they all inherit from application.
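The layout convention and the application-level fallback can be sketched as a lookup plus a wrap step. This assumes the Rails 1.0-era file layout of `layouts/<controller>.rhtml` falling back to `layouts/application.rhtml`, and the `<%= @content_for_layout %>` placeholder that layouts used at the time, as I recall it. The function names are hypothetical, and the plain string substitution stands in for real ERb rendering.

```python
from pathlib import Path
from typing import Optional

PLACEHOLDER = "<%= @content_for_layout %>"


def find_layout(views_dir: Path, controller: str) -> Optional[Path]:
    """Prefer layouts/<controller>.rhtml, else fall back to layouts/application.rhtml."""
    for name in (controller, "application"):
        candidate = views_dir / "layouts" / f"{name}.rhtml"
        if candidate.is_file():
            return candidate
    return None


def render(views_dir: Path, controller: str, template_output: str) -> str:
    """The layout wraps the template's output, not the other way round."""
    layout = find_layout(views_dir, controller)
    if layout is None:
        return template_output
    return layout.read_text().replace(PLACEHOLDER, template_output)
```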

Unit tests are built in. Type "rake test_units" to run all of the unit tests. Type "rake recent" to test all of the files that have changed in the last 10 minutes. Unit tests are good for testing the model. What about controllers? There are functional tests for that. Use assert_response to make sure you get back the response from the application that you expect.
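The idea behind `rake recent` (only test what was touched recently) is easy to sketch: keep the files whose modification time falls inside a time window. This Python version is an illustration, not Rails' actual task; the 10-minute window comes from the talk, while the `*.rb` pattern and the function name are assumptions.

```python
import time
from pathlib import Path


def recently_changed(root: Path, minutes: float = 10, pattern: str = "*.rb") -> list:
    """Files under root matching pattern that were modified in the last `minutes`."""
    cutoff = time.time() - minutes * 60
    return sorted(p for p in root.rglob(pattern) if p.stat().st_mtime >= cutoff)
```

For example, `recently_changed(Path("test"))` would list the test files edited in the last ten minutes, which a runner could then execute.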

Includes AJAX helpers: bits of code you can invoke from Ruby, that will generate JavaScript in output to the browser.

Miscellaneous notes:

- session is global hash that you can reference with variables for the user's session.

- rake is make in Ruby.

- breakpoint - super-cool way to debug. Can set it anywhere, stops execution and throws you into console to inspect things.

Tools:

- It looks like David is using TextMate as his text editor.

- iTerm

- CocoaMySQL - GUI for messing with MySQL tables.

OSCON Tutorial: Presentation Aikido with Damian Conway

"The way of harmonizing with the Flow (of the universe)"

Presentation isn't about subduing the audience, bending them to your will, or turning their energies against them

- More about connecting with the audience

- Sharing knowledge and ideas for their benefit

- Encouraging them to travel a path with you

- Helping them

- when you speak, they may or may not be giving you attention, respect, etc.

- they are giving you their time (most precious resource)

- recognize it as a gift, start to enjoy it

- be comfortable, confident on stage

- audience doesn't care what you're saying, only that you are saying it well

- talk about topics you genuinely understand, actually use

- talk about real experiences that you have had

- if you can't get out of talk, do research, and admit to audience that it is new to you as well

- the battle is always won beforehand

- adjust language to audience

- confidence that's reassuring (that material is comprehensible to normal human beings)

- flow that is captivating

- all arranged beforehand

- budget 10 hours per hour of speaking

- Damian does more like 20

- most important decision

- what are you going to talk about? always have a choice about it, even if assigned

- talk about what you care about, passionate about, excited about

- all about attitude

- about enthusiasm

- always trumps informative

- hook 'em at the beginning, before they mentally change channels

- in given time, really only giving overview or sampler

- reassurance -- this material is attainable

- build cognitive framework in mind, that provides framework for understanding

- comprehensible material that resonates with audience

- so they can go and learn details later

- entertaining is the way to do this

- supposed to convey vaguest hint of topic

- engender some sense of intrigue

- try working in a pop reference, those who get it will be smug and on your side

- try to make title short

- state main thesis in first three words, field of interest in last four words

- marketing pitch

- informative, without giving stuff away

- should say that talk is interesting and speaker is excellent

- show it by making interesting and excellently written

- give some sense of structure of talk

- short, easy-to-read, catchy, tantalizing

- register shortage

- structure information hierarchically -- the story

- fundamental point of aikido

- will only remember 5 points, so choose 5 most important things

- find story that fits those 5 points smoothly

- connect to ideas that people already have and understand

- metaphor

- now, ready to begin writing the talk...

- easier to arrange information in Word, or a text editor, than in presentation software

- outline style, so it translates well to slides

- causal style

- chronological

- layered - drill down, build up; good for when length is variable; can adapt to needs of audience -- can choose when to go deep or stay up

- cumulative -- start simple, and build up

- simple narrative

- flow decides what topics go in, or stay out. if it doesn't reinforce the 5 points, it is extraneous

- where is leap too great for audience?

- need to go back and fill in gaps

- squirt raw material into slides

- fix it

- start deleting words - handout best as proper sentences, slide points don't work as sentences

- this really matters

- coherence -- single visual entity

- harmonious whole

- different styles of presentation are needed for different objectives

- style can be a part of the message

- great secret of persuasion is to not use many words

- short declarative statements, no hedging

- minimize decoration

- can use smaller, bulleted text

- softer hues, etc.

- if you don't have one, don't use MS templates

- steal from Apple, cool websites, etc.

- reverse-engineer from a cool preso that you saw

- almost every slide is too busy

- too much text, too many ideas

- Damian typically runs 120 slides/hr

- use images like seasoning

- better made visually than spoken

- use them in an unexpected manner

- meaningful, occasional

- want to animate a process

- if not easy in PowerPoint, too complex

- almost never resort to video

- only if absolutely amazing, and really makes the point

- don't distinguish by color alone (color blindness)

- use luminance instead

- alternatives: switch to a different font, or draw a luminance box around text to highlight it, etc.

- tools for picking color schemes, like how artists can do (PowerPoint in MacOS X has one)

- check under the worst-case scenario -- set the OS to black & white; this makes slides that work for people of all visual perception abilities

- side-by-side hard for people to grok

- show transitions, only animate what changes

- break up monotony of talk

- keep audience engaged

- variations in pace and style

- can always come up with more material than there is time -- people try to cram it all in

- people can't absorb all of that stuff

- they will need chance to absorb and digest

- asides, humorous bits, examples, etc. give this needed break

- make sure to intersperse some easier concepts

- can use landings as navigational beacons, to notify audience that new topic is starting

- signpost - common slide (like TOC), that you come back to as you progress, showing topics you have talked about, and what is coming next.

- no-one understands charts

- put it in notes

- break chart down, and explain piece by piece

- same goes for graphs

- the best presentations look effortless

- audience desperately wants the material to seem "easy"

- keys: competence, preparation, practice, organization, style, attitude

- be passionate, connect with audience, give of yourself -- but be self-less

- worst case, read and then give summary that isn't on slide

- secret of timing

- most important

- do it aloud

- great way to show audience what you are talking about

- people visualize better than they hear

- practice

- try and script software demos if at all possible.

- make demo smooth -- hot keys to get to example, and run with one touch