Left NASA to go to Google, because that is where the data is.

ACL - computational linguistics conference. Percentage of papers that mention statistical/probabilistic concepts rose from 0% in 1979 to 55% in 2006.

More data vs. better algorithms - the more data you have, the better your algorithm performs, even if it isn't the best algorithm available.

Size of repositories has been growing - Brown Corpus, 1 million words in 1961. The Internet (from authors) - about 100 trillion words, depending on how you count.

There are always new words; vocabulary grows at a linear rate. First found by Bell Labs examining a news ticker: they thought it would level out, but it never has.

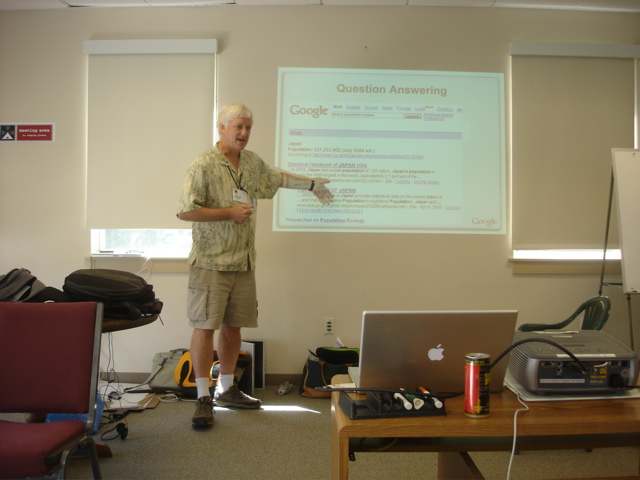

Peter Norvig, talking about the amazing things you can do with lots and lots of data

Constructed the Google LDC N-Gram corpus by analyzing Google's index. Over a trillion words; look at the number of sentences (95 billion), then break down by length (1 word, 2 words, etc).
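To make the counting step concrete, here is a minimal sketch of n-gram counting over a toy corpus. The `ngram_counts` helper, the whitespace tokenization, and the two sample sentences are assumptions for illustration, not how the Google corpus was actually built.

```python
from collections import Counter

def ngram_counts(sentences, n):
    """Count every run of n consecutive tokens across the sentences."""
    counts = Counter()
    for sentence in sentences:
        tokens = sentence.lower().split()  # naive whitespace tokenization (assumption)
        for i in range(len(tokens) - n + 1):
            counts[tuple(tokens[i:i + n])] += 1
    return counts

# Toy stand-in for a web-scale corpus (hypothetical data).
toy_corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
]

unigrams = ngram_counts(toy_corpus, 1)
bigrams = ngram_counts(toy_corpus, 2)

print(unigrams.most_common(3))
print(bigrams.most_common(3))
```

At real scale the same counts would be computed in a distributed fashion, with rare n-grams dropped below some threshold.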

Data-Derived models

- Parametric model

- Semi-parametric

- Non-parametric - many inputs, keep all the data around
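A small sketch of the two ends of this list, assuming one-dimensional data: the parametric model compresses the data into two numbers (a slope and an intercept), while the non-parametric model keeps every point and answers by lookup. The function names and the sample data are hypothetical.

```python
def fit_line(xs, ys):
    """Parametric: summarize the data as a slope and an intercept (least squares)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def nearest_neighbor(xs, ys, query):
    """Non-parametric: keep all the data and return the label of the closest point."""
    closest = min(range(len(xs)), key=lambda j: abs(xs[j] - query))
    return ys[closest]

# Hypothetical training data lying on y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

slope, intercept = fit_line(xs, ys)
parametric_prediction = slope * 2.4 + intercept        # data can be thrown away after fitting
nonparametric_prediction = nearest_neighbor(xs, ys, 2.4)  # needs the data at query time

print(parametric_prediction, nonparametric_prediction)
```

The non-parametric version gets better simply by adding more points, which is why it pairs well with very large data sets.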

Google Sets is one of the first things that they did.

One source of data is web data, another is interaction with users (Google Trends).

Spelling correction - they do corpus-based correction rather than dictionary-based. A corpus-based approach knows which words are on the web, and also which ones go together (great for proper names).
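A minimal sketch of the corpus-based idea: generate candidates one edit away from the typed word and pick the one seen most often in the corpus. The tiny `CORPUS` string is a stand-in assumption, and this sketch uses only single-word frequencies; it leaves out the "which words go together" context described above and is not Google's actual implementation.

```python
import re
from collections import Counter

# Tiny stand-in corpus (assumption); a real system would count words from web text.
CORPUS = "the quick brown fox jumps over the lazy dog the dog barks at the fox"
WORD_COUNTS = Counter(re.findall(r"[a-z]+", CORPUS.lower()))
LETTERS = "abcdefghijklmnopqrstuvwxyz"

def edits1(word):
    """All strings one delete, transpose, replace, or insert away from word."""
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = [a + b[1:] for a, b in splits if b]
    transposes = [a + b[1] + b[0] + b[2:] for a, b in splits if len(b) > 1]
    replaces = [a + c + b[1:] for a, b in splits if b for c in LETTERS]
    inserts = [a + c + b for a, b in splits for c in LETTERS]
    return set(deletes + transposes + replaces + inserts)

def correct(word):
    """Return the most frequent corpus word within one edit, else the word itself."""
    if word in WORD_COUNTS:
        return word
    candidates = [w for w in edits1(word) if w in WORD_COUNTS]
    if not candidates:
        return word
    return max(candidates, key=WORD_COUNTS.get)

print(correct("teh"), correct("dgo"), correct("fox"))
```

Because the vocabulary comes from the corpus rather than a dictionary, any proper name that appears often enough in the text becomes a valid correction target.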

How to extract facts?

- Build scrapers for individual sites, based on regex.

- Build a general pattern (regex) and look anywhere on the web. This requires post-processing, because the results you get back are less reliable.

- Learn patterns from examples

- Learn relations from examples
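The second approach in the list (one general regex applied everywhere, followed by post-processing) might look like the sketch below. The "was born in" pattern, the sample `pages`, and the `min_support` voting threshold are all assumptions for illustration; the third sample page is deliberately wrong to show why post-processing is needed.

```python
import re
from collections import Counter

# General pattern: "<Capitalized Name> was born in <year>" (hypothetical pattern).
BORN_PATTERN = re.compile(r"([A-Z][a-z]+(?: [A-Z][a-z]+)+) was born in (\d{4})")

# Made-up page snippets standing in for text found anywhere on the web.
pages = [
    "Ada Lovelace was born in 1815 in London.",
    "According to most sources, Ada Lovelace was born in 1815.",
    "One fan page claims Ada Lovelace was born in 1816.",
    "Alan Turing was born in 1912 and studied at Cambridge.",
]

def extract_birth_years(texts, min_support=2):
    """Apply the pattern to every text, then keep only well-supported facts."""
    votes = {}
    for text in texts:
        for name, year in BORN_PATTERN.findall(text):
            votes.setdefault(name, Counter())[int(year)] += 1
    facts = {}
    for name, counter in votes.items():
        year, support = counter.most_common(1)[0]
        if support >= min_support:  # post-processing: drop weakly supported matches
            facts[name] = year
    return facts

facts = extract_birth_years(pages)
print(facts)
```

With this toy data the Turing match is dropped because only one page supports it, which shows the trade-off: a stricter threshold removes noise but also discards true facts that are rarely stated.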

Learning from text (machine reading) - multiple levels: chunk text into pieces (concepts), put concepts into relations, learn patterns that represent those relations. Feed this lots of text, and the algorithm finds the top concepts and the words most often associated with them.

Tried statistical machine translation, by analyzing parallel texts.

The Internet has a body of writers and a large body of readers. Use data to build AI techniques that connect writers to readers.